Security Now 965 Transcript

Please be advised this transcript is AI-generated and may not be word for word. Time codes refer to the approximate times in the ad-supported version of the show.

0:00:00 - Mikah Sargent

Hey, I'm Mikah Sargent, coming in for Leo Laporte. Coming up on Security Now: first, we follow up on what happened with CERT. Yes, the listener who talked about a huge, serious security flaw in the website of a major enterprise has some follow-up after speaking to the vulnerability analysis team at CERT. Then we talk about what VMware is dealing with and what Microsoft is choosing to do when it comes to vulnerability disclosure. Here's a hint: they're kind of waiting until the end of the week to tell people what's going on. Plus, China ditching America, at least in terms of its technology. And, easily my favorite part of the show, Steve Gibson explains why passkeys are, quote, "far more secure than any super strong password plus any second factor." All of that coming up on Security Now. Podcasts you love, from people you trust. This is TWiT. This is Security Now, episode 965, with Steve Gibson and Mikah Sargent, recorded Tuesday, March 12, 2024: Passkeys versus 2FA.

This episode of Security Now is brought to you by Vanta, your single platform for continuously monitoring your controls, reporting on security posture and streamlining audit readiness. Managing the requirements for modern security programs is increasingly challenging and time-consuming. Enter Vanta. Vanta gives you one place to centralize and scale your security program. You can quickly assess risk, streamline security reviews and automate compliance for SOC 2, ISO 27001, and more. You can leverage Vanta's market-leading trust management platform to unify risk management and secure the trust of your customers. Plus, use Vanta AI to save time when completing security questionnaires. G2 loves Vanta year after year. Check out this review from a CEO: "Vanta guided us through a process that we had no experience with before. We didn't even have to think about the audit process. It became straightforward and we got SOC 2 Type 2 compliant in just a few weeks." Help your business scale and thrive with Vanta. To learn more, watch Vanta's on-demand demo at vanta.com. That's V-A-N-T-A dot com. Thanks for watching.

0:03:14 - Steve Gibson

Who look this way.

0:03:22 - Mikah Sargent

Just to watch him go. I like that, I like that. Anyway, it's good to see you.

0:03:30 - Steve Gibson

Likewise, and thank you for standing in for Leo. He's on a beach somewhere. He says he will come back with a tan, so that's good. We'll hope no skin cancer.

0:03:41 - Mikah Sargent

Yes, exactly. A safe tan. At our age, one considers the downside of being too brown.

0:03:49 - Steve Gibson

Anyway, I had said, and suspected, or planned, to title today's podcast "Morris II," after, well, the name the guys who created this thing gave it. It's a way they found of abusing generative AI to create an internet worm, and, of course, the Morris worm was the very first worm on the internet and is famous for that reason. Anyway, something came up, as sometimes happens, and so we'll be talking about Morris II next week, unless something else comes up that pushes it a little bit further downstream. This is the result of a listener's question, which caught me a little bit by surprise, because I understand this stuff, since I live in it, this being across-the-network authentication. I realized that his question was a really good one and it had a really good answer, and so at first I put it as the first of our listener feedback questions. But as I began to evolve the answer, I thought, no, no, no, this just has to be something we really focus on, because it's an important point. So today's podcast, number 965 for March 12th, is titled "Passkeys versus Two-Factor Authentication," and I would bet that, even for people who think they really understand all this, there may be some nuances that have been missed. So I think it's going to be a great, interesting and useful podcast for everyone, but, of course, that's at the end.

We're going to start with whatever happened with that guy who complained to CERT about a vulnerability he found in a major website. What headache has VMware been dealing with for just the last few days? What's Microsoft's latest vulnerability disclosure strategy, and why does it, well, suck? What's China's Document 79 all about, and is it any surprise? What long-awaited new feature is in version 7.0 of Signal, currently in beta but coming out soon?

How is Meta coping with the EU's new Digital Markets Act, which just went into effect and requires its messaging platforms to be interoperable with others? Whoops. Also, what's the latest on that devastating ransomware attack on Change Healthcare, which many of our listeners have asked about: "Hey, Steve, did I miss you talking about that, or haven't you?" I haven't. So it's time, because now we have a lot of information about it. And, as I said, after addressing a lot of interesting feedback from our listeners, which we're also going to make time for, we're going to talk about a few things about passkeys that are actually not at all obvious. And, of course, we always have a great Picture of the Week.

0:07:08 - Mikah Sargent

So I think, overall, a great podcast for our listeners. Absolutely. And now is the time to take a quick break to tell you about one of our sponsors on Security Now. It's DeleteMe. If you've ever searched for your name online, as I have, and thought, why is there all of this information about me online? Yeah, you're not alone. DeleteMe is there to help out, though, because DeleteMe helps reduce the risk from identity theft, credit card fraud, robocalls, cybersecurity threats, harassment and unwanted communications overall. I have used DeleteMe to help remove a lot of what I would consider unnecessary information about me online. There are, you know, the parts of me that are public because of my job, that I want to be public, but then there's the stuff that's not for other people and yet is available if people go looking for it. We've also used the tool here at TWiT to remove a lot of our CEO's information online, because bad actors have attempted to use that information to try to scam some of the employees at TWiT: "Hey, this is Lisa. Can you buy 12 Apple gift cards and send them to this address?" We didn't really fall for it, but you can imagine that, in a place where maybe they're not as security-minded because they don't have a podcast called Security Now, that could be an issue. So let's talk about how you use DeleteMe.

The first step is to sign up and give them some basic information for removal. They need that personal information to know who you are and what they should be looking for. Then DeleteMe experts find and remove your personal information from hundreds of data brokers, helping to reduce your online footprint and keeping you and your family safe. And here's where DeleteMe really works so well: it will continue to scan and remove your personal information regularly, because those data brokers continue to try to scoop up that data, hold on to it and present it to the folks who are looking for it.

This includes addresses, photos, emails, relatives, phone numbers, social media, property value and so much more. And since privacy exposures and incidents affect individuals differently, their privacy advisors ensure that customers have the support they need when they need it. So protect yourself and reclaim your privacy by going to joindeleteme.com/twit and using the code TWIT, T-W-I-T. That's joindeleteme.com/twit with the code TWIT for 20% off. And thank you, DeleteMe, for sponsoring this week's episode of Security Now. All right, we are back from the break, and that means it is time for the Picture of the Week.

0:09:48 - Steve Gibson

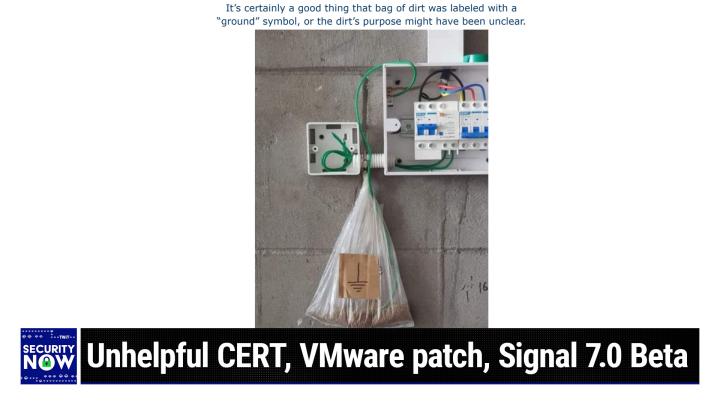

Okay, so, as I said, this relates to one of our more famous earlier Pictures of the Week, where there was a big AC generator sitting on a factory floor somewhere, and next to it was a pail, a large pail of dirt, and a rod was stuck into the dirt, to which was attached a big ground wire. Really, it just demonstrates a complete lack of understanding of the nature of grounding. I mean, the dirt in that first picture was, you know, in a plastic pail, so it wasn't connected to the earth, which is really what we want a ground to be. Anyway, here we have an updated version of the same thing. It looks like some electrical wiring in progress; the construction is not all finished yet. Whereas most typical high-power electrical wiring is in steel conduit and steel boxes, this is all white plastic. So that suggests that the need for grounding is even more imperative than it would be if this were all in steel, which is probably grounded somewhere anyway, but not here.

So, for reasons that are unclear, a green wire is coming out of this and going into a plastic bag which has been suspended from a piece of plastic conduit. And sure enough, this bag has about an inch worth of brown dirt at the bottom of it, and the green wire has been made certain to get all the way down to the bottom of the bag and bury itself in the dirt. I gave this picture the caption: "It's certainly a good thing that bag of dirt was labeled with a ground symbol, or the dirt's purpose might have been unclear." We see what looks like the equivalent of a brown Post-it note, but somebody went out of their way to draw a very pretty ground symbol on it.

Yeah, I mean, because electricity and electronics were my first passion, I've drawn my share of ground symbols in my life, and I would have to say this is right up there with the best of them. So, yes, we have, again...

0:12:38 - Mikah Sargent

It's like, who... what... What would you trust?

0:12:45 - Steve Gibson

Would you trust the wiring of an electrician who did this? I mean, I don't know. We have some pictures that really do beg the question: what happened here?

0:13:00 - Mikah Sargent

Anyway, it's just a question that works all around. It's fantastic but awful.

0:13:06 - Steve Gibson

Oh dear.

Okay, so recall from last week our listener who discovered a serious flaw in the website of a major enterprise whose site entertains millions, hundreds of millions, of users. In fact, since then, as a consequence of what he forwarded to me, which I redacted, I now know who that site is and what that enterprise is.

And wow.

So the problem was that these people were using a very outdated version of the nginx web server, which contained a well-known critical remote code execution vulnerability for which working Python proof-of-concept code was readily available. All of which means that any bad guy who went to any of this company's various websites, and there are several, and then looked at the headers of the website's response, which identify the server as nginx along with its version, could then, as I did and as our listener did, Google that version of nginx and say, whoa, there are some problems with that version from four years ago. That person would be able to find the proof-of-concept code, as I did, and use it, which neither our listener nor I did, in order to get inside, to crawl inside the web server.
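A minimal sketch of the fingerprinting step described above: reading the version out of an HTTP response's Server header and comparing it with a cut-off. The header strings and the `MINIMUM_SAFE_VERSION` threshold are hypothetical, since the transcript doesn't name the nginx release involved. nginx's `server_tokens off;` directive stops the version from being advertised, but that only hides the symptom; upgrading is the actual fix.

```python
import re

# Hypothetical cut-off for illustration only; the transcript does not say
# which nginx release the vulnerable site was running or which one fixed it.
MINIMUM_SAFE_VERSION = (1, 20, 1)


def parse_nginx_version(server_header):
    """Extract (major, minor, patch) from a header like 'nginx/1.16.1'."""
    match = re.match(r"nginx/(\d+)\.(\d+)\.(\d+)", server_header.strip())
    if match is None:
        return None  # not nginx, or the version has been suppressed
    return tuple(int(part) for part in match.groups())


def looks_outdated(server_header, minimum=MINIMUM_SAFE_VERSION):
    """True only when a version is advertised and it is older than `minimum`."""
    version = parse_nginx_version(server_header)
    return version is not None and version < minimum


# In practice the header would come from a live response, for example
# urllib.request.urlopen(url).headers.get("Server", "").
print(looks_outdated("nginx/1.16.1"))  # True
print(looks_outdated("nginx/1.25.4"))  # False
print(looks_outdated("nginx"))         # False: no version advertised
```

Comparing tuples rather than strings keeps "1.9.0" from sorting after "1.16.1".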

Anyway, this is a serious problem with this major enterprise and its website, and I'll just say that it's not as if the website happens to be a side-effect feature of this enterprise. The nature of the enterprise is such that it is the website. I mean, its entire purpose and existence on the planet is this website and others that it also owns.

0:15:25 - Mikah Sargent

So to be clear, it's not as if the website is just a place where you go to learn about its features. The service is the site that you're going to. It's not just a pretty little website.

0:15:34 - Steve Gibson

Yes. It is not Google, but it would be like Google having a problem of this magnitude with its servers. You know what I mean? Its purpose is its site, essentially, and so it's a big deal. Now, our listener, as I described last week, gave this company 120 days to, you know, deal with it. He contacted them. They replied, okay, thank you for notifying us, we'll look into this and get back to you, and they said it may take four months for that to happen. So he waited until day 118 and then said, knock, knock, you've got two days here.

What's going on? And they said, oh, oh right, well, let us get back to you; give us a couple more days. So they came back and said, well, we've asked our security people and they're not really thinking that's a big problem. So how about this: we'll give you 350 bucks to just shut up and go away. What? And so at that point he tweeted me and said, Steve, what do you think about this? And I said, you know, you've done everything you can. They're not being very cooperative. Why don't you send a report to CISA, which is, you know, the US's front-line facility for dealing with this kind of stuff? So he thought, okay, that's a good idea, and he submitted a report, along with his entire email chain, his whole dialogue with these guys, to CISA, which forwarded it to CERT. Okay, well, he sent me a tweet updating me on what was going on, and I have it in the show notes after I removed the identification of the site that we're all talking about here. This came back from CERT, saying: Greetings, thank you for your vulnerability report submission.

After review, we've decided not to handle the case for two reasons. First, we typically avoid handling or publishing reports of vulnerabilities in live websites. Second, since the vendor is cooperating and communicating with you, there is very little additional action that we can contribute. What? Anyway, continuing: We recommend working directly with the affected vendor before proceeding with public disclosure. Well, okay, he's never going to disclose this publicly; that would be a disaster. Feel free to contact us again if you run into problems with your coordination effort or if there's some other need for our involvement. Regards, and then, in like giant 50-point type: Vulnerability Analysis Team at the CERT Coordination Center, kb.cert.org. Okay, well, that's disappointing. You know, they say we typically avoid handling vulnerabilities in live websites. So what, do they limit their handling of vulnerabilities to dead websites? This doesn't inspire confidence.

0:19:14 - Mikah Sargent

It feels like they didn't read the evidence that was provided. This feels like when you go and talk to any tech support person and they jump to assumptions and don't hear you out first, and then they're providing advice and you're going, but did you not just hear what I literally just said? I don't like this.

0:19:31 - Steve Gibson

Sorry, go ahead. No, no, no, you're exactly right. Our listener's correspondence, which he had forwarded to CERT with his report, made clear that the company had no intention of doing anything further. So CERT was just passing the buck back to someone who had already demonstrated that he had no leverage. I mean, that was why I recommended these guys. I figured, if Uncle Sam knocks on the door and says, hey, you've got a little problem with your web server and it would be bad if you got compromised, then they might listen, because they're not listening to Joe.

So anyway, in my reply to him just now, since he had never disclosed anything publicly, and I know he never would, he's not a bad guy, I suggested that since he had done all he could reasonably do, and again never said anything publicly, he should take the money as compensation for his trouble and leave whatever happens up to fate. You know, if the company does eventually get bitten, it will only be their fault. He warned them. I mean, he even reminded them after four months, and they said, oh yeah, you.

0:20:56 - Mikah Sargent

Not you again. We thought you'd just disappear. So...

0:21:00 - Steve Gibson

yeah, anyway, can I?

0:21:01 - Mikah Sargent

Ask you something? I would like to know when it is reasonable, and, as you see it, the right thing to do, to disclose things publicly. Not in this situation in particular; I just mean in general, because I thought that was something some security researchers end up doing if the company continues not to behave or continues not to, you know, work with them. Isn't public disclosure part of the bargaining power that the security researcher has?

0:21:33 - Steve Gibson

Yeah. Famously, Google, for example. You know, they find a problem, they notify the vendor, and they say, we have started a 90-day clock, and you need to fix this sucker. Whether or not you do, we're going to go public with this in 90 days, so take it seriously. Unfortunately, this policy is the result of experiences just like this one, where companies say, you know, we don't think it's that big a problem. Okay, great, then we're going to let everybody know about it, if it's not that big a problem. Hold on, hold on, wait a minute. So, you know, this guy's not Google. He can't do that.

And, of course, Google also has, Lord knows, what kind of a bank of attorneys able to protect it, unlike this guy, for whom the last thing he wants is to get stomped on by a massive enterprise that just turns one of its attorneys loose and says, let's go make this guy's life miserable.

0:22:47 - Mikah Sargent

So that's the danger. That makes sense.

0:22:49 - Steve Gibson

Yeah. So, first of all, there have been instances where even Google has not followed its own policy, when what it found was so egregious that it couldn't, in good conscience, let it be known publicly, even though it really wanted to, because it would, you know, collapse the world, right? So you can lead the company to water. You can say, please fix this, won't you? We all want you to. It'll make the world a safer place.

But if, ultimately, they say no, it's like, okay, well, you called our bluff, because we're not going to tell everybody, because that would be really bad. So good luck, and at least we're going to protect ourselves from you. In fact, this guy was worried that the information this company has about him, because he's a subscriber or a member or whatever it is, would be at risk. And he asked them in his correspondence, like, what are you doing about my privacy concerns? Because you've got an insecure website and you know a lot about me. And they never, ever said anything about that.

0:24:11 - Mikah Sargent

So, again.

0:24:13 - Steve Gibson

You know, I guess maybe he could contact Google.

0:24:18 - Mikah Sargent

That would be great.

0:24:19 - Steve Gibson

That might be an idea, actually: contact their threat people and say, hey, what do you think about this? If you think it's bad, maybe you ought to give them a 90-day countdown and get this thing really fixed.

0:24:34 - Mikah Sargent

Yeah, that is anyway.

0:24:35 - Steve Gibson

It was a disappointing response, though, from our own government, because this is not a company you want to have attacked. In fact, speaking of which, we'll be talking about Change Healthcare here in a while. Talk about a company you don't want to have attacked. Oh my Lord, one in three of the nation's health records were compromised in this. Love that. Love that, that's so great. What good could possibly... What's going on?

Speaking of which, a week ago, on March 5th, Broadcom, which is now VMware's parent company after buying it, issued a security advisory. It happens to be VMSA-2024-0006. It's encouraging that it's only number 6. That's nice, because these days the CVEs have had to expand to six digits. Well, no, actually five, but they used to be four, just because there are so many problems happening. This security advisory addresses vulnerabilities that have been discovered in VMware ESXi, VMware Workstation Pro and Player, and Fusion. In other words, pretty much everything: if it's got VMware in its name, we've got a problem. This is because of a problem in the ubiquitous USB virtualization drivers, which have been found to contain four critical flaws. I didn't dig in to find out who found them, whether VMware found them or somebody else found them and told them, but they are so bad that VMware has even issued patches for previous, out-of-service-life releases of anything with VMware in its name.

An attacker who has privileged access in a guest OS virtual machine, so root or admin, may exploit these vulnerabilities to break out of the virtualization sandbox, which, of course, defeats the whole point of virtualization, and access the underlying VMware hypervisor. Updating VMware of any kind immediately is the optimal solution. But due to the location of the problem, which is just a specific driver, if anything prevents that from being done, and if your environment might be at risk because it includes untrusted VM users, removing VMware's USB controllers from those virtual machines will prevent exploitation until the patches can be applied. VMware said that the prospect of a hypervisor escape warranted an immediate response under what they call, for some reason, the IT Infrastructure Library, or ITIL. They said, "In ITIL terms, this situation qualifies as an emergency change, necessitating prompt action from your organization." So both the UHCI and XHCI USB controller drivers contain exploitable use-after-free vulnerabilities, each having a maximum severity rating of 9.3 for Workstation and Fusion and a base score of 8.4 for ESXi. There's also an information disclosure vulnerability in the UHCI USB controller with a CVSS of 7.1: somebody who has admin access to a virtual machine could exploit that other vulnerability to leak memory from the VMX process. Anyway, I've included a link in the show notes to VMware's FAQ about this for anyone who might be affected and interested in more details. Basically, if you've got VMware in your world where somebody running in a virtual machine might be hostile, this is to be taken seriously. If you're just a person at home with VMware because you like to run Win7 in a VM or whatever, then this is not something to worry about; update it when you get around to it.
But anyway, VMware is on alert because, of course, it is in use in many cloud infrastructures where you don't know who your tenants are, so it's important to get that fixed.
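For anyone who can't patch right away, the workaround described above is removing the virtual USB controllers. Here is a rough Python sketch of auditing a VM's .vmx configuration text for controllers that are still enabled. The key names `usb.present`, `ehci.present` and `usb_xhci.present` are assumptions about how VMware records these controllers, so check them against your own files and VMware's FAQ before relying on this.

```python
# Assumed .vmx keys for the virtual USB controllers (UHCI, EHCI, xHCI).
# Verify these against your own VM configuration files before relying on them.
USB_CONTROLLER_KEYS = ("usb.present", "ehci.present", "usb_xhci.present")


def parse_vmx(text):
    """Parse simple `key = "value"` lines from .vmx text into a dict."""
    settings = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blanks, comments, and anything malformed
        key, _, value = line.partition("=")
        settings[key.strip().lower()] = value.strip().strip('"')
    return settings


def enabled_usb_controllers(text):
    """Return the assumed USB controller keys that are switched on."""
    settings = parse_vmx(text)
    return [key for key in USB_CONTROLLER_KEYS
            if settings.get(key, "").upper() == "TRUE"]


sample = '''.encoding = "UTF-8"
usb.present = "TRUE"
ehci.present = "TRUE"
usb_xhci.present = "FALSE"
'''
print(enabled_usb_controllers(sample))  # ['usb.present', 'ehci.present']
```

This only reads configuration text. Removing the controllers should still be done the way VMware documents it, with the VM powered off.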

Unfortunately, Microsoft's networks, and apparently its customers, remain under attack to this day by that Russian-backed Midnight Blizzard group. Remember that they were originally named Nobelium, and Microsoft said, let's call it Midnight Blizzard, because that sounds scarier, I guess. This is the group that successfully infiltrated some of Microsoft's top corporate executives' email by attacking the security of a system that had been forgotten. It was in the back room somewhere and had not been updated to the latest state-of-the-art security and authentication standards. They said, whoops, we forgot to fix that.

The sad thing here, the saddest thing of all, I think, is that Microsoft has apparently, believe it or not, now taken to dropping its public relations bombs late on Fridays, as happened again this past Friday, and not for the first time. This is now its pattern. The Risky Business security newsletter explained things by writing this. They said: Microsoft says that Russian state-sponsored hackers successfully gained access to some of its internal systems and source code (whoops) repositories. The intrusions are the latest part of a security breach that began in November of last year and which Microsoft first disclosed in mid-January. Initially, the company said hackers breached corporate email servers and stole inboxes from the company's senior leadership, legal and cybersecurity teams. In an update on the same incident, posted late Friday afternoon, as is the practice of every respectable corporation...

0:31:44 - Mikah Sargent

That's sarcasm, for those who are not picking up on that.

0:31:46 - Steve Gibson

That's sarcasm. Anyway, Microsoft says it found new evidence over the past weeks that the Russian hackers were now weaponizing the stolen information. The Redmond-based giant initially attributed the attack to Midnight Blizzard, a group also known as Nobelium (that's right, before Microsoft gave them a scarier name), one of the cyber units inside Russia's foreign intelligence service. Microsoft says that since exposing the intrusion, Midnight Blizzard has increased its activity against its systems. Well, and how. Per the company's blog post, Midnight Blizzard's password sprays increased in February by a factor of 10, compared to the already large volume it saw in January of 2024. Furthermore, Risky Business notes, if we read between the lines, the group is now also targeting Microsoft customers.
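A password spray tries a few likely passwords against many accounts rather than many passwords against one account, which is why per-account lockouts tend not to catch it. Below is a minimal Python sketch of flagging that shape in failed-login data. The `(source_ip, username)` event format and both thresholds are hypothetical, and real sprays are often spread across many source addresses, so a per-source view like this is only a starting point.

```python
from collections import defaultdict

# Hypothetical thresholds chosen for illustration only.
MIN_DISTINCT_ACCOUNTS = 20      # a spray touches many accounts...
MAX_ATTEMPTS_PER_ACCOUNT = 3    # ...but only a few times each, to dodge lockouts


def find_spray_sources(failed_logins):
    """failed_logins: iterable of (source_ip, username) tuples for failed attempts."""
    attempts = defaultdict(lambda: defaultdict(int))
    for source_ip, username in failed_logins:
        attempts[source_ip][username] += 1

    suspects = []
    for source_ip, per_account in attempts.items():
        # Wide and shallow: many accounts, few tries against each one.
        if (len(per_account) >= MIN_DISTINCT_ACCOUNTS
                and max(per_account.values()) <= MAX_ATTEMPTS_PER_ACCOUNT):
            suspects.append(source_ip)
    return sorted(suspects)


# One source tries a single password against 25 accounts (a spray);
# another hammers one account 50 times (classic brute force).
events = [("203.0.113.7", f"user{i}") for i in range(25)]
events += [("198.51.100.9", "admin")] * 50
print(find_spray_sources(events))  # ['203.0.113.7']
```

The brute-force source is deliberately not flagged here, because ordinary lockout policies already handle that pattern.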

While Microsoft's legalese makes it clear that no customer-facing systems were compromised, the company weaselly confirmed that customers have been targeted. Okay, what does that mean? Emails stolen by the Russian group also contained infrastructure secrets, you know, secret tokens, passwords, API keys, etc., which Midnight Blizzard has been attempting to use. So it's not good that the Russians managed to compromise Microsoft's executives' email in the first place, and that the information they stole is now being leveraged to facilitate additional intrusions, including, apparently, the exfiltration of Microsoft's source code repositories. That's not good for anyone. But, as we know, I always draw a clear distinction between mistakes and policies. Mistakes happen; certainly a big one happened here. But the new policy that caught my eye is that, unfortunately, we're now seeing a pattern develop of late-Friday-afternoon disclosures of information that Microsoft hopes will be picked up and published when fewer people are paying attention.

0:34:12 - Mikah Sargent

So, yep, having worked in journalism for ages, well, "ages," for a long time... How old are you, exactly? How many

0:34:26 - Steve Gibson

eight, how many years ago one age at least.

0:34:29 - Mikah Sargent

Okay, but yes, that's something we always pay attention to: when is this coming out, and what is the messaging behind it? The fact that they're waiting until Friday afternoon is a clear signal that they don't want people to see it, and that is wrong when it comes to security disclosures. Luckily, you've got your eye on it, and that means we can get to it on Tuesday. You can let it go on Friday, Microsoft, but we'll pick it up on Tuesday.

0:35:00 - Steve Gibson

Well, yes, and it's also relevant that this was not any kind of emergency announcement that had to go out on Friday afternoon. I mean, this was just, well, we're updating you on the status, but we'd rather you didn't receive this. Yeah, so.

0:35:21 - Mikah Sargent

So here it is Right.

0:35:23 - Steve Gibson

Okay, so the official name for Beijing's secret directive is Document 79, but it is informally known as the "Delete A" order, where A is understood to stand for America. Yes, China wants to delete America. The secret directive was issued back in September of 2022. It's designed to remove American and other non-Chinese hardware and software from its critical sectors, although, apparently, since we get the A, we figure prominently. The directive mandates that state-owned companies replace all US and other non-Chinese equipment and software in their IT systems by 2027. So you've got three years left, folks; that was a five-year order that began two years ago. So far, the affected companies include Cisco, IBM, Dell, Microsoft and Oracle, and my reaction to this is, yeah, who can blame them?

With tensions on the rise between the US and China, this only makes sense. The US has done the same thing with Chinese-made security cameras in sensitive areas, like military bases and protected corridors of power, and we know that Russia is working to remove American technological influence from inside its borders. The problem for all the parties involved is that today's systems are so complex that Trojan capabilities are readily hidden and can be made impossible to find. No one doubts the influence that Chinese intelligence services are able to exert over the design of Chinese equipment, and there's long been some question about just how much influence US intelligence services might have over the installation of such backdoors in proprietary software. Years and years ago, there was a key inside of Windows that, purely by coincidence, had the initials NSA, and it was like, oh my God. Folks, if it were an NSA key, they would have changed the name.

0:38:09 - Mikah Sargent

So it would not be that obvious.

0:38:11 - Steve Gibson

But it was like, oh my God. Everyone was all a-Twitter, if you'll pardon the choice of words, over the idea that the NSA had some secret key buried in Windows. No. Which is not to say they don't. Like, why wouldn't they? But they wouldn't leave the name the same. So, anyway, to my way of thinking, I just wish we could all get along. It's unfortunate to be seeing this pulling apart and this rising global tension, because ultimately it is less economically efficient for us all to have to retrench. Like, we now have to be making chips in the US, when China was so good at it, and it's like, what's the problem? Well, it could be a problem. So ultimately it's hardly surprising, but I just really regard it as unfortunate. Okay, I'm keeping my eye on the time, but this is going to be a long podcast because we have a lot of good stuff to get to, so I'm going to do one more before we take our next break. That's good.

Signal, you know, the messaging app, is the best one there is that is cross-platform. I will argue that iMessage is good if you're in an iOS world, but Signal if you need other stuff. Signal's reliance upon physical phone numbers for identifying the communicating parties has been a longstanding annoyance, as well as a concern for privacy and security researchers, who've long asked the company to switch from phone numbers to usernames in order to protect users' identities. The just-announced version 7 of Signal will add the creation of temporary username aliases. So here's how Signal explains it.

In their announcement, they said: Signal's mission and sole focus is private communication. For years, Signal has kept your messages private, your profile information, like your name and profile photo, private, your contacts private, and your groups private, among much else. (Notice the word "private" figuring prominently in that single sentence.) Now we're taking that one step further by making your phone number on Signal more private. Here's how: if you use Signal, your phone number will no longer be visible to everyone you chat with by default. And props to them for changing this.

Many, many organizations will add a feature but are afraid to change the behavior. It's like, oh well, you know, we don't want to freak everyone out, so we've got it now, but we're going to have it turned off and you've got to turn it on if you want it. No, Signal says those phone numbers are going to disappear, so get over it. They said people who already have your number saved in their phone's contacts will still see your phone number, since they already know it.

If you don't want to hand out your phone number to chat with someone on Signal, you can now (as of version 7, which is in beta, so it'll be available within a few weeks) create a unique username that you can use instead. They said you'll still need a phone number to sign up for Signal. They noted that a username is not the profile name that's displayed in chats, it's not a permanent handle (that's why I referred to it earlier as an alias), and it's not visible to the people you're chatting with in Signal.

A username is simply a way to initiate contact on Signal without sharing your phone number. If you don't want people to be able to find you by searching for your phone number on Signal, you can now enable a new optional privacy setting. This means that unless people have your exact unique username, they will not be able to initiate a conversation or even know that you have a Signal account, even if they do have your phone number. These options are in beta, they said, and will be rolling out to everyone in the coming weeks. Once these features reach everyone, both you and the people you're chatting with on Signal will need to be using the most updated version of the app to take advantage of them. Also, all of this is optional. While we changed the default to hide your phone number from people who don't already have it saved in their phone's contacts, you can change this setting. You're not required to create a username, and you have full control over whether you want people to be able to find you by your phone number or not. Whatever choices work for you and your friends, you'll still be able to communicate with your connections in Signal, past and present.

So that all seems good. They've changed to hiding phone numbers by default, and they've added an optional textual username as a phone number alias. Signal knows the phone number behind the alias, but users do not. I think that's pretty slick. Their blog posting about this contains a great deal more information; basically, it's a complete user guide to working with this coming feature. I put it in this week's show notes, since it might be of interest to anyone who uses Signal often. So you can click the link in the show notes and you'll be taken to their page. It's "phone-number-privacy-usernames," so you could also probably Google that if you want to jump straight to that Signal page. So anyway, a nice improvement coming from Signal.

0:44:26 - Mikah Sargent

Vee Vee in the Discord says, "I set it up last week and I love it. This is such welcome news." So we've already got some folks who have enabled the new username feature, or, as you pointed out, the alias, which I think is a good way of putting it. And you can tell they've thought this through.

I think of when I used to post on X, formerly known as Twitter, and I would have a tweet and then about three things underneath it responding to how all of the pedantic people on Twitter would probably take my post and say, you didn't say this, or you forgot to say that. This feels like they're answering every possible question that folks might have, and, as you said, there's a full user guide. I think that's smart given their user base, right? There are going to be lots of concerns about any change taking place, what it does and what it doesn't do. So good on them, or good on it, I guess, for figuring out what needs to be said.

0:45:25 - Steve Gibson

Yeah. Basically they've created a mapping on top of the phone numbers, you know, a little dictionary, where a user can assign themselves an alias and then say to somebody, "Hey, contact me on Signal at this username." It's going to be, you know, ScrewyBumblebee or something, which is not their phone number. It doesn't need to be anything in particular; it just needs to be something else. So beneath the covers, when somebody says, "Hey, I want to connect to ScrewyBumblebee," Signal knows that actually means this phone number, but the user making the connection never sees that.
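Steve's "little dictionary" can be sketched as a toy directory where usernames are aliases layered over phone numbers. This is a minimal illustration under stated assumptions, not Signal's implementation: the class and method names, the opaque `acct-N` IDs, and the `findable_by_phone` flag are all hypothetical, and the sketch omits everything a real service needs (persistence, rate limiting, encryption).

```python
import itertools
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Account:
    account_id: str                 # opaque ID a client may learn (hypothetical)
    phone_number: str               # known only to the server in this sketch
    findable_by_phone: bool = True  # hypothetical name for the new privacy toggle


class Directory:
    """Toy server-side directory: usernames are aliases layered over phone numbers."""

    def __init__(self) -> None:
        self._ids = itertools.count(1)
        self._by_phone: Dict[str, Account] = {}
        self._by_username: Dict[str, Account] = {}

    def register(self, phone_number: str) -> str:
        # A phone number is still required to sign up.
        account = Account(f"acct-{next(self._ids)}", phone_number)
        self._by_phone[phone_number] = account
        return account.account_id

    def set_username(self, phone_number: str, username: str) -> None:
        account = self._by_phone[phone_number]
        owner = self._by_username.get(username)
        if owner is not None and owner is not account:
            raise ValueError("username already taken")
        # Not a permanent handle: setting a new username drops the old one.
        for name, acct in list(self._by_username.items()):
            if acct is account:
                del self._by_username[name]
        self._by_username[username] = account

    def set_findable_by_phone(self, phone_number: str, allowed: bool) -> None:
        self._by_phone[phone_number].findable_by_phone = allowed

    def lookup_username(self, username: str) -> Optional[str]:
        account = self._by_username.get(username)
        # The caller gets an opaque ID, never the phone number behind the alias.
        return account.account_id if account else None

    def lookup_phone(self, phone_number: str) -> Optional[str]:
        account = self._by_phone.get(phone_number)
        if account is None or not account.findable_by_phone:
            return None  # indistinguishable from "no Signal account"
        return account.account_id
```

The point of the design is that both lookups return the same opaque handle, so a contact reached by username never learns the number, and turning off phone discovery makes a phone lookup look exactly like a number with no account behind it.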

0:46:08 - Mikah Sargent

Yeah, as you said, very slick. I think that's a great way to do it. All right. Does that mean it's time for a break? It does, all right.

I am Mikah Sargent, subbing in for Leo Laporte while he's on vacation, here with the wonderful and wise Steve Gibson. I want to take a break to tell you about Kolide, who are bringing you this episode of Security Now. You have heard us talk about Kolide before, but have you heard that Kolide was just acquired by 1Password? Well, if you didn't, they have been. That's pretty big news, since these two companies are leading the industry in creating security solutions that put users first.

For more than a year, Kolide Device Trust has helped companies with Okta ensure that only known and secure devices can access their data, and that's what they're still doing, just now as part of 1Password. So if you've got Okta and you've been meaning to check out Kolide, now is a great time. Kolide comes with a library of pre-built device posture checks, and you can write your own custom checks for just about anything you can think of. Plus, you can use Kolide on devices without MDM, like your Linux fleet, contractor devices, and every BYOD phone and laptop in your company. Now that Kolide is part of 1Password, it's only getting better. Check it out at kolide.com/securitynow to learn more and watch the demo today. That's K-O-L-I-D-E dot com slash securitynow, and we thank Kolide for sponsoring this week's episode of Security Now. All right, back from the break, and we are continuing on with a great, information-packed episode of Security Now. Always information-packed, so maybe I should say information-stuffed.

0:47:55 - Steve Gibson

There was a neat little cartoon running during the Kolide spot.

0:47:58 - Mikah Sargent

It's pretty cool, I had not seen it before.

0:48:00 - Steve Gibson

Yeah, it's good. Okay. So last Thursday, the 7th, the European Union's DMA, the Digital Markets Act, went into force. Among many important features, it requires interoperability among specified, probably large, instant messaging systems. So here's what Meta recently explained about their plans. I'll give everybody a quick hint: it amounts to, if you want to talk to us, you've got to use the Signal protocol, but we'll meet you halfway by making it possible for you to connect with our previously closed servers. So Meta wrote: To comply with the new EU law, the Digital Markets Act, which comes into force on March 7, we've made major changes to WhatsApp and Messenger to enable interoperability with third-party messaging services. We're sharing how we enabled third-party interoperability (which they shortened to "interop") while maintaining end-to-end encryption and other privacy guarantees in our services as far as possible.

On March 7, a new EU law, the Digital Markets Act, comes into force. One of its requirements is that designated messaging services must let third-party messaging services become interoperable, provided the third party meets a series of eligibility requirements, including technical and security requirements. And that's, of course, the key, because if not, Meta is able to say no, no, no, not so fast. This allows, they wrote, users of third-party providers who choose to enable interoperability to send and receive messages with opted-in users of either Messenger or WhatsApp, both designated by the European Commission as being required to independently provide interoperability to third-party messaging services. For nearly two years, our team has been working with the European Commission to implement interop in a way that meets the requirements of the law and maximizes the security, privacy and safety of its users. Interoperability is a technical challenge, even when focused on the basic functionalities as required by the DMA. In year one, the requirement is for one-to-one text messaging between individual users and the sharing of images, voice messages, videos and other attached files between individual end users. In the future, requirements expand to group functionality and calling. To interoperate, third-party providers will sign an agreement with Messenger and/or WhatsApp and will work together to enable interoperability. Today we're publishing the WhatsApp Reference Offer for third-party providers, which will outline what will be required to interoperate with the service. The Reference Offer for Messenger will follow in due course.

While Meta must be ready to enable interoperability with other services within three months of receiving a request, it may take longer before the functionality is ready for public use. We wanted to take this opportunity to set out the technical infrastructure and thinking that sits behind our interop solution. Our approach to compliance with the DMA is centered around preserving privacy and security for users as far as is possible. The DMA quite rightly makes it a legal requirement that we should not weaken security provided to Meta's own users. The approach we've taken in terms of implementing interoperability is the best way of meeting DMA requirements whilst also creating a viable approach for third-party providers interested in becoming interoperable with Meta and maximizing user security and privacy.

First, we need to protect the underlying security that keeps communication on Meta's end-to-end encrypted messaging apps secure: the encryption protocol. WhatsApp and Messenger both use the tried and tested Signal Protocol as a foundational piece for their encryption. Messenger is still rolling out end-to-end encryption by default for personal communication, but on WhatsApp, this default has been the case since 2016. In both cases, we are using the Signal Protocol as the foundation for these end-to-end encrypted communications, as it represents the current gold standard for end-to-end encrypted chats. In order to maximize user security, we would prefer third-party providers to use the Signal Protocol. Since this has to work for everyone, however, we will allow third-party providers to use a compatible protocol if they are able to demonstrate it offers the same security guarantees as Signal.

I'll just interrupt here to say that will be a high bar. Signal is open source. It's an open protocol. Why in the world would anybody roll their own from scratch? Like Telegram, which has got a bizarro protocol that nobody has ever understood and no one's ever tried to prove secure, because it's just garbage. I mean, it's scrambled stuff, so maybe it's secure, but it's nice to have proofs, and these days we can get proofs, just not for random stuff somebody just made up.

Anyway, they said: To send messages, the third-party providers have to construct message protobuf structures, which are then encrypted using the Signal Protocol and then packaged into message stanzas in XML, you know, the Extensible Markup Language. The servers push messages to connected clients over a persistent connection.
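As a rough illustration of the three layers in that quote (a structured message, then Signal Protocol encryption, then an XML stanza), here is a hedged Python sketch. It is not Meta's wire format: JSON stands in for protobuf, the `message` and `enc` element names and the `build_stanza`/`open_stanza` helpers are hypothetical, and the encryption step is an injected function because a real Signal Protocol session is far beyond a short example.

```python
import base64
import json
import xml.etree.ElementTree as ET
from typing import Callable

# Stand-in for a Signal Protocol session: any bytes -> bytes transform.
# This sketch performs no cryptography of its own.
Cipher = Callable[[bytes], bytes]


def build_stanza(sender: str, recipient: str, text: str, encrypt: Cipher) -> str:
    # Layer 1: structured message. Meta describes protobuf; JSON stands in
    # here so the sketch needs only the standard library.
    plaintext = json.dumps({"type": "text", "body": text}).encode("utf-8")
    # Layer 2: end-to-end encryption of the serialized message.
    ciphertext = encrypt(plaintext)
    # Layer 3: wrap the ciphertext in an XML message stanza for transport.
    # The element and attribute names are invented for illustration.
    stanza = ET.Element("message", {"from": sender, "to": recipient})
    enc = ET.SubElement(stanza, "enc", {"protocol": "signal"})
    enc.text = base64.b64encode(ciphertext).decode("ascii")
    return ET.tostring(stanza, encoding="unicode")


def open_stanza(xml_text: str, decrypt: Cipher) -> str:
    # Reverse the layers: parse the XML, base64-decode, decrypt, deserialize.
    stanza = ET.fromstring(xml_text)
    encoded = stanza.findtext("enc")
    if encoded is None:
        raise ValueError("stanza has no encrypted payload")
    message = json.loads(decrypt(base64.b64decode(encoded)))
    return message["body"]
```

Passing a toy reversible function into both helpers exercises the packing and unpacking; in a real client that slot would hold the Signal Protocol session, and the serialized stanza would travel over the persistent server connection the quote mentions.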

Third-party servers are responsible for hosting any media files their client applications send to Meta clients, such as image or video files, using a media message. Meta clients will subsequently download the encrypted media from the third-party messaging servers using a Meta proxy service. It's important to note that the end-to-end encryption promise Meta provides to users of our messaging services requires us to control both the sending and receiving clients. This allows us to ensure that only the sender and the intended recipient can see what's been sent, and that no one can listen to your conversation without both parties knowing. Now, just to decrypt that corporate speak, what they're saying is, if we don't control the sending and receiving clients, which is to say, if we are going outside of our own... what am I looking for? Not "out of band." There's a well-known... "out of pocket"... "out of environment"...

Yeah, out of environment, then we can't make any representations about what happens to the messages that you send out of our services, or about where the messages coming into our services come from. So that's them just sort of stepping back and saying, you know, only if you stay within Messenger and WhatsApp are we willing and able to take responsibility for the messages that are being transacted. So, you know, beware. Anyway, they finish, saying: While we build a secure foundation for interop that uses Signal Protocol encryption to protect messages in transit, without ownership of both client endpoints we cannot guarantee what a third-party provider does with sent or received messages, and we therefore cannot make the same promise. So again, they just said what I just said. I didn't realize that was coming.

0:56:42 - Mikah Sargent

With those last two paragraphs, who are they talking to? Do you think they're talking to the potential services that are trying to connect, and explaining their thinking here? Or is this Meta, not being absolutely certain how the EU will take this choice to use Signal and kind of push Signal, and so they're almost trying to explain to them, look, we know that it would be easier if we just opened it up to all of them, but here's why Signal is the best? I guess what I'm asking is, it kind of runs contrary to what the company said early on about having worked with the EU for so long on figuring this out, if what they're trying to do there is say, come on, don't punish us further, we promise this works. Or do you think this is more for those other third-party messaging platforms, to say, look, we know that you might want to use something else, but it makes sense why we're doing this and why we have to have control of the sending and receiving?

0:57:46 - Steve Gibson

So my ignorance is showing here. Can non-Facebook users use WhatsApp? I know Messenger is Facebook only.

0:57:57 - Mikah Sargent

That is a really good... I don't think you need a Facebook account to have WhatsApp, but that's a good question.

0:58:02 - Steve Gibson

I think that's correct. So my feeling is, yes, other messengers might want to hook up, but the most obvious messaging system to connect would be Signal itself, right? Why wouldn't Signal, which invented the Signal protocol, say, hey, that sounds great, we'd like to allow Facebook Messenger and WhatsApp users to interact with Signal users? So Signal themselves would be the obvious interop choice. But again, Telegram is going to have a hard time. I mean, there are lots of others.

When I was talking last week about the various levels, level zero, one, two and three, that Microsoft, sorry, that Apple has assigned to messaging, there were some down at level zero, where encryption was optional, that I'd never heard of, like QQ. What's that? But that's apparently some messaging app somewhere. So again, any other company is going to have a heavy lift. Basically, I think what this means is, we're using Signal at Meta, and anybody else who wants to talk with us is going to have to use it too, because, again, Signal's bar is very high. In fact, they just added post-quantum encryption to Signal, so if what Meta is saying is we'll connect to you if you're as good as Signal, well, that now means you also need to have post-quantum encryption available.

0:59:58 - Mikah Sargent

Basically, it is saying you're going to need to use Signal, because you're not going to be able to prove to us that anything else is as good.

1:00:05 - Steve Gibson

It won't be as good if it's not Signal, because Signal is the best. Unless you ask Apple, and they think, well, Signal is a level two and we're level three. It's like, okay, fine. That's because Apple is rotating their keys every 50 messages or every week, whichever comes first, right? And they're saying, oh, that gives us an advantage. So, okay.

1:00:35 - Mikah Sargent

Yeah, I don't know. I'm with you in that I don't think that should bump it up to level three. But at the same time, when I was reading through that explanation, I did think it was cool, because I hadn't considered how, you know, if somebody's whole history of messages uses the same key, even in a post-quantum world, getting that key means getting the whole thing, whereas this means they only get access to a slice of it. That's pretty smart. That would be cool to see implemented.

1:01:03 - Steve Gibson

Yeah, I agree with you. It's like, well, why not do it if we can? And it was expensive to do it. You know, they had to amortize that key across many messages, because the keys are 2K, and many messages are like, okay, Mom, I'm on my way. So a per-message overhead of 2K would be insane when the message is just 12 characters. So, anyway, okay.
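The trade-off being discussed, rotating keys after 50 messages or one week (whichever comes first) and spreading the cost of a roughly 2K key over those messages, can be sketched as below. This is a hypothetical policy function built from the figures mentioned in the conversation, not Apple's or Signal's code, and "2K" is Steve's round number rather than an exact key size.

```python
from datetime import datetime, timedelta

# Thresholds as described on the show; treat them as assumptions, not Apple's spec.
MAX_MESSAGES_PER_KEY = 50
MAX_KEY_AGE = timedelta(days=7)


def should_rekey(messages_since_rekey: int, last_rekey: datetime, now: datetime) -> bool:
    """Rekey after 50 messages or one week, whichever comes first."""
    return (messages_since_rekey >= MAX_MESSAGES_PER_KEY
            or now - last_rekey >= MAX_KEY_AGE)


def amortized_overhead(key_bytes: int, messages_per_key: int) -> float:
    """Average extra bytes per message when one key exchange covers many messages."""
    return key_bytes / messages_per_key


if __name__ == "__main__":
    # Steve's round "2K" figure spread over 50 messages vs. sent with every message.
    print(amortized_overhead(2048, 50))  # about 41 bytes per message
    print(amortized_overhead(2048, 1))   # the full 2048 bytes, even on a 12-character message
```

Amortized over 50 messages, the key costs about 41 bytes per message instead of 2,048, which is why a fresh key on every "Okay, Mom" text would be so wasteful, while rekeying every few dozen messages still limits how much of a history any one key exposes.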

So a lot of our listeners have asked me about this big cyberattack, a ransomware attack on the US's Change Healthcare service nearly three weeks ago. So, three weeks ago tomorrow, on Wednesday, February 21, the American company Change Healthcare, which is a division of UnitedHealth Group, also known as UHG, was hit by a ransomware attack that was devastating by any measure. That cyberattack shut down the largest healthcare payment system in the US. Then, to give you a sense for this, just this past Sunday, two days ago, the US Department of Health and Human Services addressed an open letter to, quote, "health care leaders," writing: As you know, last month, Change Healthcare was the target of a cyberattack that has had significant impacts on much of the nation's health care system. The effects of this attack are far-reaching. Change Healthcare, owned by UnitedHealth Group (UHG), processes 15 billion, with a B, health care transactions every year and is involved in one of every three patient records. The attack has impacted payments to hospitals, physicians, pharmacists and other health care providers across the country. Many of these providers are concerned about their ability to offer care in the absence of timely payments, but providers persist despite the need for numerous workarounds, no, I'm sorry, numerous, onerous workarounds, and cash flow uncertainty. So this has really upset a lot.

Okay, so backing up here a bit: the day following the attack, February 22, UnitedHealth Group filed a notice, as they must with the US Securities and Exchange Commission because they're a publicly traded company, stating that, quote, "a suspected nation-state associated cybersecurity threat actor had gained access to Change Healthcare networks." Following that UHG filing, CVS Health, Walgreens, Publix, GoodRx and Blue Cross Blue Shield of Montana reported disruptions in their insurance claims. Basically, it was all shut down. The cyberattack affected family-owned pharmacies and military pharmacies, including the Naval Hospital at Camp Pendleton. The healthcare company athenahealth was affected, as were countless others.

One week later, on the 29th of February, you know, leap year day, UHG confirmed that the ransomware attack was, quote, "perpetrated by a cybercrime threat actor who represented itself to Change Healthcare as ALPHV/BlackCat." I'm just going to refer to them as BlackCat from now on because it's easier. In the same update, the company stated that it was, quote, "working closely with law enforcement and leading third-party consultants, Mandiant and Palo Alto Networks, to address the matter." And then, four days after that disclosure on the 29th, on March 4th, which is eight days ago, Reuters confirmed that a Bitcoin payment equivalent to nearly 22 million US dollars had been made into a cryptocurrency wallet, quote, "associated with BlackCat." They paid. We think they paid the ransom.

1:05:35 - Mikah Sargent

Oh my God.

1:05:39 - Steve Gibson

Yeah. Change Healthcare has not commented on the payment, instead stating that the organization was, quote, "focused on the investigation and the recovery." Right, apparently to the tune of 22 million US dollars. On the same day, a reporter at Wired stated that the transaction looked very much like a large ransom payment. What's transpired since then is a bit interesting, since BlackCat is a ransomware-as-a-service group. Okay. This, of course, means that they provide the software and the back-end infrastructure, while their affiliates are the ones that perpetrate the attacks and penetrate the networks, and in return the affiliate receives the lion's share, in this case 70%, of any ransoms paid. That's always been the way it is. However, in this instance, it appears that BlackCat is not eager to part with that 70%, which amounts to a cool $15.4 million. So they're claiming that they've shut down and disbanded. Whoa, nice timing on that. Okay. So exactly one week ago, the HIPPA, you know, HIPPA, the US organization, the HIPPA Journal, posted some interesting information. They wrote, "The ALPHV..."

1:07:20 - Mikah Sargent

Oh, sorry, Steve, can I correct you there? It's HIPAA, not HIPPA.

1:07:26 - Steve Gibson

Oh, good, thank you. HIPAA Journal. Thank you. So they said: The ALPHV/BlackCat ransomware group appears to have shut down its ransomware-as-a-service (RaaS) operation, indicating there may be an imminent rebrand. The group claims to have shut down its servers, its ransomware negotiation sites are offline, and a spokesperson for the group posted a message, quote, "everything is off, we decide," unquote. Probably a Russian speaker. A status message of "GG" was later added, and BlackCat claimed that their operation was shut down by law enforcement and said it would be selling its source code.

However, security experts disagree and say there is clear evidence that this is an exit scam, where the group refuses to pay affiliates their cut of the ransom payments and pockets 100% of the funds. BlackCat is a ransomware-as-a-service operation where affiliates are used to conduct attacks and are paid a percentage of the ransoms they generate. Affiliates typically receive around 70% of any ransoms they generate, and the ransomware group takes the rest. After the earlier disruption of the BlackCat operation by law enforcement last December, BlackCat has been trying to recruit new affiliates and has offered some affiliates an even bigger cut of the ransom. An exit scam is the logical way to wind up the operation, and there would likely be few repercussions other than making it more difficult to recruit affiliates if the group were to choose to rebrand.

Who is going to do this again if you just screwed the last affiliate that you were working with after they generated a $22 million ransom?

1:09:36 - Mikah Sargent

Hello, we are WhiteCat, and we have no affiliation to BlackCat. Would you like to work with us?

1:09:42 - Steve Gibson

Maybe DarkBlack or DarkGray. Anyway, HIPAA Journal wrote: It's not unusual for a ransomware group to shut down operations and rebrand after a major attack, and BlackCat likely has done this before. BlackCat is believed to be a rebrand of the earlier BlackMatter ransomware operation, which itself was a rebrand of DarkSide.

1:10:13 - Mikah Sargent

Okay, so if they have anything with shadows, dark, black, any of that, you know it's the same company, the same people, going forward.

1:10:20 - Steve Gibson

Come on, that's right. DarkSide was the ransomware group behind the attack on the Colonial Pipeline in 2021 that disrupted fuel supplies on the Eastern Seaboard of the United States. Shortly after the attack, the group lost access (what do you know?) to its servers, which they claimed was probably due to the actions of their hosting company. They also claimed that funds had been transferred away from their accounts and suggested they were seized by law enforcement. BlackMatter ransomware only lasted for around four months before it was, in turn, shut down, with the group rebranding in February of 2022 as BlackCat.

On March 3 of this year, an affiliate (and here it comes) with the moniker notchy, N-O-T-C-H-Y, posted a message on the RAMP forum claiming they were responsible for the attack on Change Healthcare. The post was found by a threat researcher at Recorded Future. Notchy claimed they were a long-time affiliate of the BlackCat operation (and that could have gone back two years, because that's when BlackCat re-formed), had affiliate-plus status granting them a larger piece of the pie, and had been scammed out of their share of the $22 million ransom payment. They claimed that Optum, which is the actual organization within Change that was hit, paid a 350 Bitcoin ransom to have the stolen data deleted and to obtain the decryption keys; in other words, a full-on standard ransomware payment. Notchy shared the payment address, which shows that a $22 million payment had been made to the wallet address, and the funds have since been withdrawn. The wallet has been tied to BlackCat, as it received payments for previous ransomware attacks that have been attributed to the group. Notchy claimed BlackCat suspended their account following the attack and had been delaying payment before the funds were transferred to BlackCat accounts. Notchy said that Optum paid to have the data deleted, but they have a copy of the six terabytes of data that was stolen in the attack. Notchy claims the data includes sensitive information from Medicare, Tricare, CVS Caremark, Loomis, Davis Vision, Health Net, MetLife, Teachers Health Trust, tens of other insurance companies, and others. The post finishes with a warning to other affiliates that they should stop working with BlackCat. It's unclear what Notchy plans to do with the stolen data, and whether they will attempt to extort Change Healthcare or try to sell or otherwise monetize the data.

Fabian Wosar, Emsisoft's CTO, is convinced this is an exit scam. After checking the source code of the law enforcement takedown notice, he said it is clear that BlackCat recycled it from December's earlier takedown notice. Fabian tweeted, quote, "There is absolutely zero reason why law enforcement would just put a saved version of the previous takedown notice up during a seizure instead of a new takedown notice," unquote. He also reached out to contacts at Europol and the NCA, who said they had no involvement in any recent takedown. Currently, neither Change Healthcare nor its parent company, UnitedHealth, has confirmed that they paid the ransom and were then cheated out of it; they issued a statement that they are currently focused on the investigation. So yeah, this is all a big mess.

It appears that the BlackCat gang has made off with Optum's $22 million. The notchy affiliate did not receive the $15.4 million or more that they feel they deserve. Optum got neither the decryption keys nor the deletion of their six terabytes of data, which they paid for, and no one but the BlackCat guys is smiling at this point. Rebranding and returning to the ransomware-as-a-service business may be impossible after taking their affiliate's money, and since $22 million is a nice piece of change, they may just go find a nice beach somewhere to lie on, one hopes. Meanwhile, here in the States, the inevitable class action lawsuits have been filed over the loss of patient health care records. At last count, at least five lawsuits are now underway.
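The $15.4 million figure is simply the affiliate's customary share applied to the roughly $22 million payment. Here is a small sketch of that arithmetic, using the approximate 70% cut and the rounded $22 million total from the discussion; the `split_ransom` name is made up for illustration, and integer math is used so dollar amounts don't pick up floating-point rounding.

```python
from typing import Tuple


def split_ransom(total_usd: int, affiliate_percent: int = 70) -> Tuple[int, int]:
    """Return (affiliate_cut, operator_cut) using whole-dollar integer math."""
    if not 0 <= affiliate_percent <= 100:
        raise ValueError("affiliate_percent must be between 0 and 100")
    affiliate_cut = total_usd * affiliate_percent // 100
    return affiliate_cut, total_usd - affiliate_cut


if __name__ == "__main__":
    print(split_ransom(22_000_000))     # the usual ~70/30 split
    print(split_ransom(22_000_000, 0))  # an exit scam: the operator keeps everything
```

Under the normal arrangement the operator's take would have been about $6.6 million; by cutting the affiliate out entirely, the operator keeps the whole amount.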

1:16:03 - Mikah Sargent

Oh, my word, this is awful.

1:16:06 - Steve Gibson

All the way around. This is an awful, big mess.

1:16:09 - Mikah Sargent

It's a huge mess, and I don't know, it kind of speaks to the dangerous nature, maybe, of having nearly the entire country's health care operating under one company. That so much can be impacted by getting into one company is pretty scary.

1:16:29 - Steve Gibson

Yes. They are a service provider, so all kinds of their clients use them to provide the insurance processing and, in return, the payments back to them, and all of that is shut down. Now they don't have their decryption keys. All of their server infrastructure is encrypted. They were willing to pay $22 million to get it back online more quickly. We don't know anything about the state of their backups and so forth, but even so, six terabytes of medical record history is now also in the hands of bad guys.

This is the unfortunate downside of the US's free enterprise system, which, you know, has lots of upsides, because it allows people to apply their efforts, to be clever, and to create companies. Unfortunately, there's a tendency for the big fish to eat the small fish and for consolidation to happen. So what we're seeing here is, as you said, what would otherwise have been a much more distributed set of services has all been consolidated. On one hand, yes, it's more efficient; you get to use one larger set of infrastructure instead of lots of smaller infrastructures. But with that consolidation comes responsibility, and what we're now seeing is what happens when, one could argue, that responsibility was not met.

1:18:17 - Mikah Sargent

"With great power," as they say. Yes, yeah. So many, so many records. You said what, one of every three?

1:18:27 - Steve Gibson

One out of every three medical records in the US is basically in that six terabytes of data. Yeah, it's awful. On my side, everything continues to proceed well with SpinRite. The limitations of 6.1, you know, being unable to boot UEFI-only systems and its lack of native high-performance support for drives attached by USB and NVMe, are as annoying as I expected they would be. There just wasn't anything I could do about it. I'm working to get 6.1 solidified specifically so that I can obsolete it with 7.0 as soon as I possibly can. That said, I still do want to solve any remaining problems that I can, especially when a solution will be just as useful and necessary for tomorrow's SpinRite 7 as it is for today's 6.1. And that's the case for the forthcoming feature I worked on all of last week.

It's something I've mentioned before, which I've always planned to do, and that's to add the capability for people without access to Windows (mostly Linux users, who, being Linux users, don't have Windows) to directly download an image file that they can then transfer to any USB drive to boot their licensed copy of SpinRite. For those who don't know, the single SpinRite executable, which is about 280K because of course I write everything in assembly language, is both a Windows and a DOS app. When it's run from DOS, it is itself SpinRite, but when that same program is run from Windows, it presents a familiar Windows user interface which allows its owner to easily create bootable media containing itself. It can format and prepare a diskette (which there's not much demand for, but since the code was there anyway from 6.0, I left it in), or it can create an optical disc ISO image file for burning to a disc or loading with an ISO boot utility. It can also create a raw IMG file, which can be put somewhere, or it can prepare a USB thumb drive.

20 years ago, back in 2004, when I first created this code, the dependence upon Windows provided a comprehensive solution, because Linux was still mostly a curiosity back in 2004 and had not yet matured into the strong alternative OS that it has since become. Today there are many Linux users who would like to use SpinRite but who don't have ready access to Windows in order to run SpinRite's boot prep, and this need for non-Windows boot preparation will continue with SpinRite 7 and beyond. The approach I've developed for use under Windows, which is used by InitDisk, ReadSpeed and SpinRite, is to start the application, then have its user insert their chosen USB drive while the application watches the machine for the appearance of any new USB drive. This bypasses the need to specify a drive letter. It works with unformatted drives or drives containing foreign file systems, which wouldn't get a drive letter, and it makes it very difficult for the user to inadvertently format the wrong drive, which was my primary motivation for developing this user-friendly and pretty foolproof approach.
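The insert-the-drive-while-we-watch approach can be sketched as a loop that compares snapshots of the attached drives and accepts exactly one newcomer. This is a hedged Python illustration of the idea only, not how InitDisk, ReadSpeed or SpinRite actually implement it (they rely on low-level Windows USB monitoring); list_drives is a hypothetical, caller-supplied function returning the identifiers of the currently attached drives.

```python
import time
from typing import Callable, FrozenSet, Optional


def wait_for_new_drive(
    list_drives: Callable[[], FrozenSet[str]],
    timeout: float = 60.0,
    poll_interval: float = 0.5,
) -> Optional[str]:
    """Return the identifier of the single drive inserted after this call starts.

    list_drives is a hypothetical callback. Drive letters are never consulted,
    so unformatted or foreign-filesystem drives are detected just the same.
    """
    baseline = list_drives()  # everything already attached is off-limits
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        current = list_drives()
        added = current - baseline
        if len(added) == 1:
            return next(iter(added))
        if len(added) > 1:
            # Ambiguous: refuse to guess which drive the user meant.
            raise RuntimeError("more than one new drive appeared")
        # Forget drives that were unplugged, so re-inserting one counts as new.
        baseline = baseline & current
        time.sleep(poll_interval)
    return None  # timed out without seeing a new drive
```

The safety property comes from the baseline: any drive attached before the user was prompted can never be selected, and two simultaneous arrivals raise an error rather than risking the wrong target.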

Unfortunately, there's a downside. It uses very low-level Windows USB monitoring, which was not implemented in WINE, the Windows emulator for Linux. So for Linux users a ready-to-boot image file is the way to go, and I'm in the process of putting the final pieces of that new facility together. It should be finished later this week, and I'm sure I'll make a note of it next week so that you can go use it. Now let's take our last break, and then we'll do some feedback from our listeners and get to our episode's main topic.

1:23:22 - Mikah Sargent

Wonderful. All right, I do want to take a moment here. By the way, Mikah Sargent, subbing in again for

1:23:28 - Steve Gibson

Leo Laporte, who is on vacation.

1:23:30 - Mikah Sargent

We knew that. Let me tell you about our next sponsor of Security Now. It's brought to you by your digital guardian, ESET. With ransomware (we were just talking about that), data breaches and cyber attacks on companies becoming increasingly prevalent in the world, it's important to have proactive security in place, ready to stop threats before they happen.

On average, it takes 277 days to identify and contain a security breach, and during a breach, time is your enemy and it's important to act fast. Consider ESET MDR, your managed detection and response service that brings threat management right to your doorstep, tailored to fit the size of your business or your current cybersecurity needs. With ESET MDR, you'll enjoy 24/7 cybersecurity coverage with a potent blend of AI-driven automation and human expertise, bolstered by cutting-edge threat intelligence, and with professional support backed by ESET's teams of renowned researchers, resolving issues becomes a manageable task. Let ESET MDR help you save time, resources and money. Go to business.eset.com/twit now to optimize your security with ESET's MDR service. Thank you, ESET, for sponsoring this week's episode of Security Now. We are back from the break, and that means it's time to close the loop with feedback from the listeners.

1:25:01 - Steve Gibson

Yes, it does. Okay, so John Robinette said, Hey, Steve, I'm sure I'm not the only one to send you this note about Telegram after listening to SN 964. There's been much chatter about the protocol Telegram uses for end-to-end encryption, but it is a common misunderstanding that they use it by default. Telegram's default uses only TLS to protect the connection between your device and their servers and does not provide any end-to-end protection. They have an additional feature, Secret Chats, that does use end-to-end encryption on the client device. It is not possible to use end-to-end encryption in group chats, and when it's used for one-on-one chats, it limits the conversation to a specific device. Based on my anecdotal experience using Telegram with a few friends, most people either do not know about Secret Chats or do not use them. I found a post from 2017 that explains Telegram's reasoning for this. And he summarized it, saying the TL;DR is that because other apps, for example WhatsApp, allow you to make unencrypted backups, actual end-to-end encryption essentially isn't worth being on by default. That's Telegram's reasoning. So they don't turn it on by default, and they claim they are more secure because of how they store backups. He says, anyway, it's not just Apple marketing speak that Telegram is not end-to-end encrypted. It's by design from Telegram.

Thanks. Wow, thank you. And I should mention that several other listeners who actually use Telegram also shared their experience. What they showed was that, unlike the way I presumed it would be when I talked about it last week, Telegram really is probably mostly used in its insecure messaging mode, since not only is encryption not enabled by default, enabling it is not even a global setting. It must be explicitly enabled on a chat-by-chat basis. That makes the use of encryption in Telegram hostile, which is certainly not what anyone hoping for privacy wants, and its fundamental inability to provide end-to-end protection for multi-party group chats strongly suggests that it's being left behind in the secure messaging evolution. I presume they're aware of this and are hopefully working behind the scenes to bring Telegram up to speed, since otherwise it's just going to become a historical footnote. Given these facts, I certainly reverse myself and agree with Apple's placing Telegram down at level zero, along with QQ, whatever that is. That's certainly where it belongs. So thank you very much, John, and everybody else, for educating me about Telegram, which I had not taken the time to look into. I'm amazed, given all the talk about Telegram, that it is as insecure as it obviously is. Someone who's tweeted before, whose handle is a hacker version of anomaly, EN0M41Y, said:

Steve, KeePassXC's latest release is also supporting passkeys now. Great news. Okay, I'm not a KeePassXC user, so I don't know what communication they may provide to their users, so I wanted to pass along that welcome news. Back when passkeys first appeared, supported only by Apple, Google and Microsoft, they each created their own individual and well-isolated walled gardens to manage passkeys only on their devices and only within their own ecosystems. There was no cross-ecosystem portability. At that time, we hoped that our password managers, which are already inherently cross-platform, would be stepping up and getting in on the act, because they would be able to offer fully cross-platform passkey synchronization. As we know, Bitwarden, a TWiT network sponsor, has done so, and given competitive pressures, it will soon become incumbent upon any and all other password managers to offer passkey support. We'll be talking about what that means when we get to this week's main topic. But for now, for anyone who's using KeePassXC and didn't know, it's now got passkey support. So that's all for the good.

A user whose actual Twitter handle, he later tells us, was created by asking Bitwarden for a random gibberish string, said, Episode 964. I'm on the go and won't have time to write an eloquently worded message like the ones you read on the show. (Actually, I think his was, but okay.) So feel free to simply summarize this if you find it relevant. In 964 last week, you mentioned feedback from a listener who mentioned the Taco Bell app using email in lieu of passwords for convenience.

Before my main comment, I'd like to mention that they're not the only ones doing this. I suspect the most popular service out there today that does this is Substack, the blogging service that has exploded in popularity since early-to-mid COVID. When I signed up there for the first time, I wasn't aware, and the whole process was very confusing and counterintuitive, given my previously learned username and password behavior. Beyond being confusing, it truly is much more of a pain for those of us who use browser-based password managers, which have made the traditional login process rather seamless. So I'll just interrupt to say, right. And you probably haven't heard me say this, Micah, but the more we explore the topic, given that passwords are entirely optional when every site includes an "I forgot my password" recovery link, the more I think that viewing passwords as login accelerators, which are now handled by our password managers, is quite apropos.

1:32:15 - Mikah Sargent

I like that. Yeah, that's a good way to think of it, because, anecdotally speaking, I know many people who are not as techy as we are whose password really is that "I forgot my password" button. Every time, that's the password, because they forget it right after they've changed it, and then they go to their email. And I have always hated this: I try to log in and they send me what they call a magic link to my email. Slack has this functionality. They kind of suggest you use that first and foremost whenever you log back into Slack, and you mentioned Substack here. There are so many that do this, and I don't like it. But when I think about it now, the people I know in my life are already going into their email every time to reset their password again. Yeah, a password as a login accelerator, that's clever.

1:33:05 - Steve Gibson

That's really what it is. In fact, we began this dialogue several weeks back with a podcast titled Unintended Consequences, and what became clear was that, with Google's deprecation of third-party tracking cookies by the midpoint of this year, the advertisers are freaked out that they're going to lose the ability to aggregate information about their visitors, so they're putting pressure on websites to add a "give us your email to join the site" step. And so the unintended consequence of third-party tracking being robustly blocked in Chrome, as it's going to be, is that websites are being incentivized to ask their visitors not necessarily to create an account, but to "join." So they're bringing up a request for your email address, then sending you a link which you have to click on, so you can't just use gibberish in order to continue using the site. So, essentially, this is going to get worse. When we just go to a site, if your browser doesn't already have a persistent cookie for it, then the site will ask you for an email address just to get it, because they want to be able to provide that email address to the advertisers on the site, since the advertisers will pay the site more money if they receive a confirmed email address in return. So we're entering a world of pain here. But that brought us to the whole idea of using email in lieu of logon and what that means, and that's what this guy's talking about. So anyway, he says, my main comment is that it may very well be possible that these implementations, meaning logon with email, are not for user convenience. They are for liability. By requiring an email to log in, the host company hoists account breach liability off of its own shoulders and onto the email providers. Your account got hacked? That's a Gmail breach, not our problem.
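The "click the link we emailed you" step works because only someone who can read that inbox can present the link's token back to the site. Here is a minimal Python sketch, assuming a hypothetical scheme in which the token is the email address plus an expiry time, signed with HMAC-SHA256 under a server-side secret. SECRET_KEY and both function names are illustrative; real services often store random single-use tokens in a database instead, and this sketch does not make links single-use.

```python
import base64
import hashlib
import hmac
import secrets
import time
from typing import Optional

SECRET_KEY = secrets.token_bytes(32)  # hypothetical server-side signing key


def make_login_token(email: str, ttl_seconds: int = 900, now: Optional[float] = None) -> str:
    """Build a signed token binding an email address to an expiry time."""
    issued = int(time.time() if now is None else now)
    payload = f"{email}|{issued + ttl_seconds}".encode("utf-8")
    sig = hmac.new(SECRET_KEY, payload, hashlib.sha256).digest()
    # urlsafe base64 never emits ".", so it is safe as a separator.
    return base64.urlsafe_b64encode(payload).decode() + "." + base64.urlsafe_b64encode(sig).decode()


def verify_login_token(token: str, now: Optional[float] = None) -> Optional[str]:
    """Return the email if the token is authentic and unexpired, else None."""
    try:
        p64, s64 = token.split(".")
        payload = base64.urlsafe_b64decode(p64)
        sig = base64.urlsafe_b64decode(s64)
    except ValueError:  # wrong shape or bad base64 (binascii.Error is a ValueError)
        return None
    expected = hmac.new(SECRET_KEY, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(sig, expected):
        return None  # forged or tampered token
    email, expires = payload.decode("utf-8").rsplit("|", 1)
    current = time.time() if now is None else now
    if current > int(expires):
        return None  # link has expired
    return email
```

The design also illustrates the listener's liability point: anyone who controls the mailbox receives a valid login link, so the account is only as secure as the email account behind it.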

On a side note, you also mentioned in the episode a listener in the financial sector who commented on password length, complexity and rules being limited by old legacy mainframes. I just wanted to share that I worked for a federal agency about a decade ago and thought I had discovered a bug when I realized I had just logged into my sole enterprise account despite missing a character on the end of my password. I tested that again and discovered the system was only checking the first eight characters of the password I thought I was using. I grabbed a fellow developer to show them, thinking I'd blown the lid off some big bug, and he shrugged and said, yeah, it only checks the first eight. I was stunned. Thanks for the wonderful content. Cheers. P.S. Yes, this account username was generated by my Bitwarden password generator.
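One well-known way a system ends up "only checking the first eight" is that some legacy schemes, such as the traditional DES-based Unix crypt(3), use only the first eight characters of a password. We don't know what that agency's system actually did; the sketch below simply mimics the truncation, using salted SHA-256 as an illustrative stand-in rather than the real DES-based algorithm, to show why a password missing its last character can still be accepted. The names are hypothetical.

```python
import hashlib
import hmac

LEGACY_MAX_CHARS = 8  # mimics legacy schemes that silently ignore the rest


def legacy_hash(password: str, salt: bytes) -> bytes:
    """Hash only the first eight characters (illustrative stand-in, not DES crypt)."""
    truncated = password[:LEGACY_MAX_CHARS]
    return hashlib.sha256(salt + truncated.encode("utf-8")).digest()


def legacy_check(stored: bytes, salt: bytes, attempt: str) -> bool:
    """Constant-time compare; any attempt sharing the first 8 chars matches."""
    return hmac.compare_digest(stored, legacy_hash(attempt, salt))
```

It is the truncation, not the comparison, that lets "correcthors" unlock an account whose password is "correcthorse": both reduce to "correcth" before hashing.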

1:36:46 - Mikah Sargent

Got it.

1:36:46 - Steve Gibson

Okay. So we first took up the subject of email-only login with our Unintended Consequences episode, since it was becoming clear that websites were planning to react to the loss of third-party tracking by establishing first-party identity relationships with their visitors. In every instance, the company's so-called privacy policy clearly stated that any and all information provided by their visitors, including their name and their email address, not only could be, but would be, shared with their business partners, which we know are the advertising networks providing the advertising for their site. But I thought this listener's idea about limiting liability was interesting, and it had not occurred to me. When you think about it, what's the benefit to a website of holding onto the hashes of all of its users' passwords? The only benefit to the site is that passwords allow its users to log in more quickly and easily when and if they're using a password manager. The problem is, the site cannot know whether the user is using a password manager. How big an issue is that? Today, only one out of every three people, 34%, are using a password manager. And while this is a big improvement over just two years ago, in 2022, when the figure was one in five, or 21%, it means that today two out of every three internet users are not using password management. And we know, exactly as you were saying about your friends, Micah, that the quality of those two thirds of all passwords may not be very high. They may no longer be using monkey123, but their chosen passwords are likely not much better.
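For context on what "holding onto the hashes" involves for a site, here is a minimal sketch of salted password storage using PBKDF2-HMAC-SHA256 from Python's standard library. The iteration count is an assumption to tune for your hardware (OWASP's Password Storage Cheat Sheet has suggested 600,000 for this algorithm), and the function names are illustrative. Even done well, weak passwords like monkey123 remain guessable offline if such hashes ever leak.

```python
import hashlib
import hmac
import secrets
from typing import Tuple

ITERATIONS = 600_000  # assumed work factor; tune and revisit over time


def hash_password(password: str, iterations: int = ITERATIONS) -> Tuple[bytes, int, bytes]:
    """Return (salt, iterations, digest) to store instead of the password."""
    salt = secrets.token_bytes(16)  # unique random salt per user
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return salt, iterations, digest


def verify_password(password: str, salt: bytes, iterations: int, digest: bytes) -> bool:
    """Recompute with the stored salt and iteration count, compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return hmac.compare_digest(candidate, digest)
```

Storing the iteration count alongside each hash lets a site raise the work factor later without invalidating existing accounts.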