Tech News Weekly 308 Transcript

0:00:00 - Mikah Sargent

Coming up on Tech News Weekly. We start by talking to Solana Larsen of the Mozilla Foundation about the human beings, the data workers behind our modern AI systems. That's right.

0:00:12 - Jason Howell

And then I speak with Dave Karpf, who talks about his rebuttal from his Substack to Marc Andreessen's techno-optimist manifesto. Everyone has some thoughts about this, and you don't want to miss Dave's thoughts, it's fantastic.

0:00:23 - Mikah Sargent

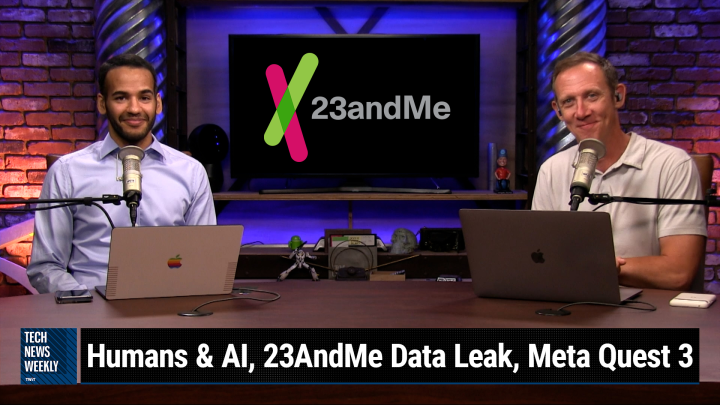

And 23andMe says yes, some data has gotten out there related to DNA, but it was because of credential stuffing, and so it means you may want to change your password and set up two-factor authentication.

0:00:38 - Jason Howell

There you go. It's a pretty important thing to do on all your accounts. Go do it now, but not now because we also want you to finish the show. I'm going to be talking a little bit about Glassholes, the return of Glassholes. This time, though, it's the Meta Quest 3 suddenly arriving in the coffee shop. How are you going to respond when that happens? All that and more coming up next on Tech News Weekly. This is Tech News Weekly, Episode 308, recorded Thursday, October 19, 2023, Rebutting Andreessen's manifesto.

0:01:20 - Mikah Sargent

This episode of Tech News Weekly is brought to you by Drata. All too often, security professionals are undergoing the tedious and arduous task of manually collecting evidence. With Drata, companies can complete audits, monitor controls and expand security assurance efforts to scale. Say goodbye to manual evidence collection and hello to automation. All done at Drata speed. Visit drata.com/twit to get a demo and 10% off implementation.

0:01:47 - Jason Howell

And by Melissa. More than 10,000 clients worldwide rely on Melissa for full spectrum data quality and ID verification software. Make sure your customer contact data is up to date. Get started today with 1,000 records cleaned for free at melissa.com/twit.

0:02:04 - Mikah Sargent

And by Mylio. Mylio Photos is a smart and powerful system that lets you easily organize, edit and manage years of important documents, photos and videos in an offline library hosted on any device, and it's free. See what has us so excited by visiting mylio.com/TWIT. Hello and welcome to Tech News Weekly, the show where every week, we talk to and about the people making and breaking the tech news. I am one of your Tech News Weekly hosts, Mikah Sargent. I'm the other host, Jason Howell.

0:02:35 - Jason Howell

Hey, Mikah, hi, hello, there you are.

0:02:39 - Mikah Sargent

Good to see you, sir. Good to see you too. We have a great episode planned, and so, as we always do, you know we always plan a great episode.

0:02:46 - Jason Howell

We're going to toot our own horn. Give us a horn, let's do it.

0:02:51 - Mikah Sargent

So I think we should get underway. I'm really excited because all of you out there are going to be getting kind of a preview of something that is currently not available to the public, because we are going to be talking about a podcast episode and, more importantly, the stories within the podcast episode that's coming out from the Mozilla Foundation's IRL podcast, Online Life is Real Life, and this season we're talking all about AI. So I thought it would be a great time to chat with the folks at the Mozilla Foundation about AI, because it's front of mind. So joining us today to talk about the humans that are actually behind a lot of the AI work out there is the editor of the IRL podcast, Online Life is Real Life. It's Solana Larsen.

Welcome back to the show, thank you, hi. Hello, it's so great to have you on and I appreciate you taking some time to join us and share some stories from a future episode of the show. So I was hoping that we could just kick things off, kind of laying the foundation, as we try to do, by talking about, just in general, how are humans involved in the AI process, because we think AI is this machine and we plug in information, especially with the latest models, ChatGPT and that kind of thing. But to get there it involves a lot of work by humans and in the show it sounds like you focus on a particular group of humans who are working on AI.

0:04:31 - Solana Larsen

Yeah, yeah, thank you, first of all, for that nice introduction and the episode that's coming out.

It's the second episode in a whole season that is about putting people over profit in AI, so we thought it was a pretty good topic to talk about.

Like the literal people who put AI together and often just go unseen and unheard of, and it's the folks who are doing the data work behind the scenes. So a lot of the labeling and content moderation and things that actually help these systems work better or work safer in different ways and, yeah, we don't usually think about them. For instance, for ChatGPT, it could be the people who help remove really kind of violent or hateful or very sexual speech from the data sets that are used to train how it interacts with people all over the world. For other types of AI systems, I mean it could even be like if you think of an autopilot of an airplane, the plane is able to find the runway because there are humans who have identified a runway to the machine thousands of times and clicked and clicked and clicked, and so, a lot of the time, the more advanced the system is, the more humans have actually touched it behind the scenes to make it work. I see.

0:05:59 - Mikah Sargent

Okay, so let's talk about, then, these large language models, because we think about them. Some of us are sort of trying to boil them down to this sort of specific understanding that it's, in a way, a very fancy autocorrect system. It is a prediction model that's determining what makes the most sense to say next, word after word or sentence after sentence, and so when we think about that, a lot of times the focus is on the data sets, right, that they go onto the Internet and they just grab a bunch of data and then use that to do it, not necessarily thinking about where humans fit into that process behind the scenes. Of course, we're the ones that generated the Internet data in the first place, but as far as what the data workers have to do there, I'd love to hear about what role they play.

And then, after we talk about that, I'd like to know too about the AI technologies that kind of came before this recent influx of large language models and transformer models. We can think of early computer vision models. Is this a hot dog? Is this not a hot dog? And some of the base autocorrect systems that worked before? I assume that human data workers have played a role in AI for a long time, so maybe you can tell us about that.

0:07:22 - Solana Larsen

Yeah, yeah, that's definitely a safe assumption and I think, you know, part of why it's important to highlight this is because, I mean, you might think, okay, well, what's bad about a job like that? You just like click when you see a runway, you click when you see a face, or... But there's a sort of hidden element of the uglier side of this type of work. One is that a lot of people are hired to do it under very precarious conditions. You know they don't have health insurance, they don't have high rates of pay. Some of the people that we bring into the story are also people who are hired in countries like Kenya, where there are far lower pay rates and where some of the most ugly and secretive parts of the work get outsourced to. So, for instance, we talked to content moderators who were reading day in and out, were reading like very, very sexually violent content, to the point that they now suffer PTSD from the things that they have seen online, who were paid less than $2 an hour.

And this was a story that was initially, it was broken in Time magazine, that the workers realized themselves that they were working on ChatGPT and then seeing how much attention this giant, you know, billion-dollar endeavor is getting worldwide, and how their rights were being trampled and how their needs weren't being met, and so we thought it was an interesting example to pick up on, not because these people are, not just because they're victims, because a lot of the time, what we try and focus on is people who are trying to make things better, like how you can actually put people ahead of profit, and what the content moderators in Kenya did. I mean, they took the companies to court, they formed a union and they're trying to advocate for their rights, and they have a lot of proposals for how you can do this type of work, not just the type of work that they did for ChatGPT, but also the type of work they do for Facebook and they do for TikTok and all kinds of other, you know, large tech companies that are outsourcing this kind of labor to countries that are often in the developing world. So there would be ways to make it better, but it would require the companies actually listening to the people who are doing the work, who, by the way, are, you know, computer science graduates or people who have been working, you know, in the corporate sector for a long time. Not that it should make any difference.

But I think sometimes, when people hear, oh well, these are people in poor countries, they should just be happy for whatever money they get, I think there's just like a wrong assumption. You know that anybody should be given work that violates their labor rights or human rights. Yeah, so that's part of it, and you know you asked about the earlier generations of AI and there's an element of wanting, I think, in the tech industry in general, wanting to hide this kind of work because it takes away from the magic of how amazing the capabilities of these systems are somehow. But there's also, I think, an element of AI builders themselves not really being aware of it or sort of being shielded from the process by these, like, platforms that you use. You know, like Amazon Turk, you know, you're able to upload tasks to people without ever talking to a human. The way that these systems are designed is to make it really easy to hire thousands of people to do small, menial tasks at scale, you know, and it does enable incredible things to happen. But when you're only interacting via a platform and that experience is like dehumanized, you know, even the people who are making these systems don't get a chance to really think about how does it feel for the person on the other side? Are they getting a fair bargain? And you know it's in all these systems, or even like in the metaverse.

One of the people we talked to in our episode talks about how, you know, being able to remove... Well, the example she gives, I'll just share it here. She says if you post a picture of a penis in the metaverse, like, how is the system going to know how to remove it? That is because somebody has clicked over and over and over again on different pictures of penises in order to teach the system how to learn its job. So I don't know. It's kind of like for many years, we learned to think about what companies, you know, do they have sweatshops to make our shoes or to make our clothing? And this is the type of awareness that we need to have about the AI systems that we use too. It's not just, you know, we can add it to a long list. There's the bias, there's the data, there's the privacy concerns and there's also the human element, which I think gets overlooked far too often.

0:12:32 - Mikah Sargent

Absolutely, and I think you know I wanted to go into more depth and I think you did.

So I'm grateful to talk about that kind of psychological toll because, as you point out, all of that stuff is kind of it happens outside of our knowledge of it or it happens kind of in the background. Maybe you think of somebody sitting at a desk and they've got, you know, an A key here and a B key here and they're looking at photos of dogs and cats and just having to choose which one is the dog and or if there's a dog in the photo. But it can be a lot more than that and you spoke to someone with true PTSD who went through this process. Do you have a kind of more general kind of explanation of how there can be a psychological toll when it comes to this work? Is this for every kind of AI system? Is it for content moderation more often, like, where does that come into play and does it play a role in large language models like ChatGPT or large language models like GPT that, you know, is behind ChatGPT?

0:13:48 - Solana Larsen

Yeah, I mean, I think people will be familiar, partly familiar, with hearing about the experience from social media content moderation, which we don't usually think about as AI work. But actually what a lot of content moderators are doing is training AI systems to do automated content moderation. So, you know, images of violence or images of nudity, these are, these are things that humans tag in order for the systems to do similar things at scale. You know, it's also why we have issues with like over-moderation or automated moderation that doesn't work or it doesn't work in all languages. Like, these are really highly complex systems, but they do need humans in the loop in that kind of design process. And I just think when you're exposed to something over and over again without a break in these like very, I don't know, they're just, they're difficult working conditions because they're also very secretive, so they're not allowed to talk about what kind of work they do. For instance, they're not allowed to tell their families what they do.

One content moderator that I spoke to, who isn't actually in the episode, but somebody who I spoke to on background in Germany, described like walking into a warehouse and having to put his phone in a locker, not being able to carry a pencil to his desk because you can't be able to, you know, write messages down or copy anything that you see online. It's just, it's a very tense situation. And then you're exposed to some of the most gruesome things, child abuse and people getting killed, and even in the case of the ChatGPT content moderators, you know, we were just talking about text, and then you think, well, how bad can that be? But when you're reading messages of people describing really horrible, gruesome things and people start wondering, well, is this real? Can I trust anybody, you know, you start to, I guess, doubt humanity or feel really bleak and depressed. You just don't get a break from it.

And so what they're saying is it would help a lot to have psychological support in these roles, and they do get some degree of counseling, but what they're saying is it's definitely not enough and they're, on top of it, not prepared that this is the kind of work that they're going to be doing. A lot of the people who are lured into this work are told that it's customer service jobs or some kind of business administration, and then, when they get there, it's something entirely different. So that's also a very difficult thing, and that's part of the reason why, in Kenya at least, they've taken companies to court In other parts of the world. You know, we'll see. Maybe this is a moment where people are beginning to wake up to this issue.

0:16:48 - Mikah Sargent

Wow, wow. Lastly, the question I have for you is is there anything our listeners should know about how to help these AI data workers improve the working conditions? You talk about work in other countries. Is there some sort of grouping being done to try and improve things for these workers who are doing something that is at the heart of? I mean, that's the most incredible thing about it is so much of the online stuff we experience is affected by algorithms and content moderation, and everything these days has AI, and if you get a new app update, it talks about AI. So, frankly, at the heart of this are the roles that these workers are playing. So what can we do to help?

0:17:46 - Solana Larsen

Yeah, it was just when you were saying that. I was also thinking, you know, these CAPTCHAs that we all have to click on.

0:17:51 - Mikah Sargent

I mean, we're all kind of data workers these days. You know, imagine doing CAPTCHAs all day long. Yeah, even when they're just trying to find, you know, photos of trains. I couldn't do that all day, every day, and so to imagine that it could be even more bleak than that is awful.

0:18:10 - Solana Larsen

Exactly, exactly. That's the mindset we have to get into. I think a great place to follow is an organization called Foxglove, foxglove.org.uk. They've been working really closely with the content moderators in Kenya and they've been following the issue of content moderation for several years and have also helped with litigation on this issue. I think that's a great place to, like, grab the newsletter and stay up to speed.

Another organization which represents, I think, also international workers, data workers, but especially in the US, is called Turkopticon. It's a worker-led organization of people who work on the Amazon Mechanical Turk platform and they often have petitions or different kinds of news updates of what they're working on. And both those organizations, I mean, there's often opportunities to give donations or, you know, to otherwise help out. But definitely listen to the podcast. We explain how all these things work and how it connects, and we have also some voices that show that this can be done differently and have different, like, financial models that they're working on. Startups that are thinking differently about how you could remunerate data work differently, because this is a huge business, so it wouldn't necessarily have to mean, you know, that people just get paid nickels and dimes.

0:19:42 - Mikah Sargent

Of course folks can head to irlpodcast.org to check out all of the seasons of the show, but of course the latest season, season seven. If folks want to stay up to date with what you are doing, is there a place they should go to do that?

0:19:59 - Solana Larsen

Well, yes, they should go to mozillafoundation.org and follow Mozilla on Twitter. And yeah, the podcast, just follow it. It's in all the different apps for podcasts. It has a long title, IRL: Online Life is Real Life, and the episode we talked about today is coming out on Tuesday, so follow now and then you'll get updated on Tuesday.

0:20:21 - Mikah Sargent

Wonderful. Thank you so much for your time today. We appreciate it.

0:20:25 - Solana Larsen

Thanks a lot, bye.

0:20:28 - Mikah Sargent

All righty, folks. Up next, it's time for a manifesto, but first let's take a quick break. Let's all breathe, to tell you about our first sponsor of Tech News Weekly this week. It's Drata. Is your organization finding it difficult to collect manual evidence and achieve continuous compliance as it continues to grow and scale? Well, as a leader in cloud compliance software by G2, Drata streamlines your SOC 2, ISO 27001, PCI DSS, GDPR, HIPAA and other compliance frameworks, providing 24-hour continuous control monitoring so you can focus on scaling securely. With a suite of more than 75 integrations, Drata easily integrates through applications such as AWS, Azure, GitHub, Okta and Cloudflare.

Countless security professionals from companies, including Lemonade, Notion and BambooHR, have shared how crucial it has been to have Drata as a trusted partner in the compliance process. You can expand security assurance efforts using the Drata platform, which allows companies to see all of their controls and easily map them to compliance frameworks to gain immediate insight into framework overlap. All customers receive a team of compliance experts, including a designated customer success manager, and Drata's team of former auditors has conducted more than 500 audits. Your Drata team keeps you on track to ensure that there are no surprises or barriers. Plus, Drata's pre-audit calls prepare you for when your audits begin, knowing that you can be prepared. I think that's incredibly important and will kind of ease the process.

Drata's audit hub is the solution to faster, more efficient audits. You can save hours of back and forth communication, never misplace that crucial evidence and share documentation instantly. All interactions and data gathering can occur in Drata between you and your auditor, so you won't have to switch between different tools or correspondence strategies. And with Drata's risk management solution, you can manage end-to-end assessment and treatment workflows, flag risks, score them and then decide whether to accept, to mitigate, to transfer or avoid them. Drata maps appropriate controls to risks, simplifying risk management and automating the process. Drata's trust center provides real-time transparency into security and compliance posture, which improves sales, security reviews and better relationships with customers and partners. So say goodbye to manual evidence collection and hello to automated compliance by visiting drata.com/twit, that's d-r-a-t-a.com/t-w-i-t. Bringing automation to compliance at Drata speed. Thank you, Drata, for sponsoring this week's episode of Tech News Weekly. Jason Howell.

0:23:13 - Jason Howell

Okay. So on Monday, this was just a couple of days ago, venture capitalist slash billionaire Marc Andreessen, he published a manifesto, which I know you've heard of at this point, the techno-optimist manifesto. People were not very happy about this manifesto and in fact, many took to the internet to share their thoughts and our guest, thankfully shared with me through Jeff Jarvis, took to Bluesky and said on Bluesky that he wanted to join the tech podcast to share his views on this manifesto. So I invited Dave Karpf, who is the internet politics professor at George Washington University and also the author of an upcoming book on reading every issue of Wired, to come on the show and talk all about it. It's good to have you here, Dave. Thank you for joining us.

0:24:03 - Dave Karpf

Thanks for having me. I appreciate you answering the call.

0:24:06 - Jason Howell

Am I the only one that answered the call? I got to know you have to get a response when you say that I imagine.

0:24:13 - Dave Karpf

This is one of the problems with Twitter not being Twitter anymore. Yeah, I had a large Twitter following and so I used to get invited on a lot of podcasts, and now I've given up on it. I'm not doing X. With the lack of that, yeah, I put out the call. I heard nothing. I wrote a very snarky Substack post about this, which is mostly what I'm going to say, but yeah, this is the one answer, so thanks so much.

0:24:36 - Mikah Sargent

Well, thank you for being here.

0:24:37 - Jason Howell

I'm happy to be able to allow you to deliver on your call. So you mentioned your Substack that you wrote, titled Why Can't Our Tech Billionaires Learn Anything New? I guess we should start there. What is the legacy that tech billionaires can't let go of?

0:24:54 - Dave Karpf

The thing I want to note here is my day job for the past five years has been reading old Wired magazines and thinking about them, which is a weird day job. It's the thing that you do as a professor after you have tenure. But he's actually done me a weird favor here, because one of the challenges that I have in doing this writing is convincing people that we should care about the stuff that Louis Rossetto, who was the founding editor of Wired magazine, like the stuff he was saying in 1994. And I can go through that with a fine-tooth comb and talk about how their image, this sort of libertarian, techno-optimist prediction of how the internet's about to change the world that they were making in 1994, I can go through with the fine-tooth comb and talk about how things didn't work out that way. They were kind of wrong and we should learn from it.

But there's always that hidden question of like, okay, Dave, you're obsessed with this, but why should we care about 1994? George Gilder was wrong about a lot of things in 1994. Get over it, Dave. Now Marc Andreessen has come along and given me this great answer: the reason we should care about the things that they were wrong about in 1994 is that the tech billionaires today still believe it was exactly right and also somehow think it's new. The image that stood out to me is, um, have you noticed? I keep on seeing like preteens wearing Nirvana t-shirts.

0:26:17 - Jason Howell

Yeah, oh yeah. I have a preteen slash teen who has a Nirvana sweatshirt and, totally just as a related aside, when she got it I asked her like, uh, are you a fan of Nirvana? And she's like, what's Nirvana? And I was like, oh my God, I have. I've failed you as a father.

0:26:33 - Dave Karpf

She now likes Nirvana, but anyways that's what I said, but let's be clear, you had failed as a father, though I'm glad you've passed that, yeah, but yeah. So like, like everybody wearing the Nirvana shirts, the Frasier reboot, like, it really feels like they've just decided it's 1993 again. Sure. And what's standing out to me is this techno-optimist manifesto that Andreessen decides to publish on Monday, 20 minutes before I taught class, which, by the way, is personally rude to me, like, come on man, very abstract, yeah, but this manifesto just reads exactly like the stuff that they were saying back before we had a worldwide web, back when the Cold War had just ended, and it looks like we're going to have globalized capitalism and everything is going to go amazingly well. All the promises you're making there are the exact promises they were making 30 years ago. And what stands out to me is, when I'm reading those promises for my day job, I always keep in mind that they kind of didn't know better back then.

Right, it was the end of the Cold War, it was the beginning of the commercial web, and so those early 90s commentaries are filled with this hope of like, you know what? Everything is going to go exactly the way the economic theorists say it will. We don't need to worry about inequality because capitalism will just take care of all of it. If we just don't tax the billionaires, certainly everyone will be better off. I mentioned this in the piece, but one of the toughest parts about reading old Wired magazines is the occasional moments when they'll mention how much houses cost, like we didn't worry about inequality for 30 years and inequality got really bad. This sense of all we need is optimism, which was a big part of 90s tech utopianism, the sense of if we just think good thoughts, if we just clap for the innovators and the entrepreneurs and the investors of Silicon Valley, then the world will be our oyster. Things will just open up.

That optimism has aged really poorly as technology has gone from, or as the internet has gone from just being the realm of the future to being the present, as big tech has swallowed the world. Or to use an earlier Marc Andreessen statement, he used to say that software had eaten the world. Like, once software had eaten the world, we're then looking at these companies and not just judging them based on their intentions, like their ambitions, but we started to judge them instead based on their actual results, like they're the biggest companies in the world. So when the world is a mess, we kind of look at them and say, okay, how are you responsible for this?

And what I've been noticing for the past couple of years is how a lot of the big tech barons, both during the techlash and after the techlash, just really don't like that negative attention. It really pisses them off. That, I think, is what this manifesto fundamentally is. It's just one of the richest people in the world, having been at sort of the center of things for 30 years, being mad that people aren't clapping the way we used to.

I think that deserves to be made fun of.

0:29:25 - Jason Howell

I just have to just call out my respect for you in this moment because you are like, what you're undergoing in reading all of these previous issues of Wired and then this moment that we are in, like, it seems like the perfect kind of like Oreo cookie to go together because it gives you a really unique perspective to be able to look at the past 30 years, and I do remember the 90s from the perspective of, you know, as a fan of technology. You know I was in my teens and early 20s. I always loved technology and when the internet came along, I did have big hopes and ideas and thoughts about, like, what is the world going to be in 20 years if this is the new reality? Everything is roses. And then I have grown into my adulthood and through my adulthood, witnessing what happens when we give a little too much faith in just letting technology take care of it or assuming that the good will come out in the end, because we've never seen this kind of ability to rapidly scale and, you know, impact so many different lives.

And we've seen some good but we've also seen a lot of bad, and I think that's what really struck me in reading through the manifesto is, and maybe this is the purpose of a manifesto. I don't know, I've never written a manifesto, but is it the job of a manifesto to call out the negative side of what one is saying? Because if so, Andreessen did a poor job of that. In fact, he didn't really call out the negative of his perspective at all. He essentially says if you think negatively about technology, you are wrong, you are short-sighted, you are standing in the way of progress and people will die.

0:31:09 - Dave Karpf

Right, yeah, and along with that, I think the most interesting and revealing part of the manifesto is it's one of the later sections where he lists finally who the enemy is. Yes, this is important because he starts by talking about lies and truth. He says we are being lied to and told that we should think negative thoughts about technology. And again it's like, man, you are a billionaire, you hang out with people who own media companies and people who have sued media companies out of existence, like, who is doing the lying, buddy? So he gets to who the enemy is and he's got a long list of them, but ones that stand out are trust and safety, tech ethics and risk management. Oh my God.

0:31:50 - Jason Howell

What horrible things.

0:31:52 - Dave Karpf

Yeah, also, let's keep in mind this guy was on the board of Facebook for a long time, so when he says trust and safety is the enemy, the trust and safety department of Facebook are not the enemy.

They are not tech pessimists who are telling people that they should hate the internet or like hate growth. Those are people who are saying, oh, as we have connected the world, it turns out that that has some downsides too, and we try to manage it. The other bit that I talked about in my response piece, which I've written about before, sort of the difference between tech optimism, tech pessimism and tech pragmatism, I think there's a false dichotomy of you're either optimistic about the future or you're pessimistic. You're for us or you're against us. Right, and like, whether I'm optimistic or pessimistic really depends on the circumstances and the day. Yeah, for sure, amen. But what I absolutely am is a tech pragmatist, and a pragmatist is somebody who says our job is not just to clap or to boo, but to actually worry about things that might go wrong and then make plans for how to fix them. But I think he really gives the game away when he lists as his enemies the people who work at these giant companies, who are involved in things like worrying about tech ethics and risk management and trust and safety, like the trust and safety people.

Those are the people at Facebook and at Twitter. Well, they were all fired at Twitter, but when they were still there, they were the people who were saying, oh well, so when you connect the world, it turns out that a bunch of white nationalists can weaponize that. It also turns out that people who want to share child sexual abuse material, they're going to use that too, and as we become giant platforms, we're going to need to manage those problems. The idea that he looks at that and says you know what? That's terrible.

Those are the enemies. Like, Marc Andreessen, how are those possibly the enemies? How is it that they're the problem that is getting in the way of, like, enough people clapping for you? And it really just suggests to me that the thing that is bumming him out, the thing that's leading him to write this right now, is that people have started to ask pragmatic questions about AI, about the platforms, about, essentially, a16z's investment portfolio, and people like Marc Andreessen don't have good answers to that. They want to be judged based on their ambitions, not based on the results, and that was fine in the 1990s when tech was still small, but it's just too big now for us to treat them with those sorts of aspirational gloves anymore.

0:34:20 - Jason Howell

Yeah, too big, keeps getting bigger, and I mean he's one of the billionaires that stands on the receiving end of profiting off of that getting bigger and bigger. It also struck me as pretty hypocritical to be saying something like trust and safety is an enemy, because trust and safety, just as one example, exists to protect people from harm or death or any number of really bad things. And yet his manifesto is kind of talking, you know, he throws around this idea of regulation around AI being, quote, a form of murder. So on one hand, you know, and I guess at the end of the day, it really goes back to this long-termism thing, right? I mean, was Marc Andreessen already kind of assumed or understood to be, I don't know, thought of as someone who thinks in terms of long-termism? Because this manifesto really kind of made me believe that that's where this is all coming from.

0:35:22 - Dave Karpf

So I hadn't. He hadn't been on my radar as somebody who'd talked about long-termism before. I've written about this a bit also on Substack. He hadn't been on my radar, though it doesn't surprise me that he's quoting this stuff, because long-termism ends up being a really good excuse for not asking powerful people with grand ambitions (indeed) to make hard choices today. Yep. Like, that's the reason why Elon Musk likes it so much. Like, if you believe yourself to be the central actor, the star actor of history today, then long-termism very easily lets you say, you know what, I'm building a better tomorrow. And, like, people thousands of years from now will still remember my name. That means I'm great and I'm good and I don't need to worry about how I treat my workers. Or, you know, whether or not, as we try to get AI to drive cars, I think a bunch more people die in car accidents. Like, it's easy to dismiss that. And I also want to say, like, I don't know whether Marc Andreessen is a real long-termist or not. Like, there are philosophers who are actually long-termists. I disagree with them, but I take them seriously. He once bought.

In the essay he quotes Brad DeLong, who wrote a book named Slouching Towards Utopia, which is a fantastic book, and he name-checks it. And also, Brad DeLong is a serious economist who has criticisms of unregulated capitalism, like capitalism that has no role for regulation. The way he quotes DeLong leaves me really thinking that there's no way that Marc Andreessen actually read that book. Like, I actually read that book. It's a long book. It's an excellent book. Andreessen didn't read that book. I don't think he actually has read up on long-termism. I think he heard it. Maybe he saw it on a discussion board and he said, yeah, that sounds good, let's put it in a manifesto. Like, this thing is sloppy thinking. It deserves to be made fun of. It doesn't deserve to be taken seriously.

0:37:09 - Jason Howell

Who knows, maybe he used ChatGPT to summarize it and he was like, okay, I'll go with that.

0:37:13 - Dave Karpf

Yes, ChatGPT writes better. Like, one of my standard things about ChatGPT is it's a cliché engine. Like, it writes fine, it doesn't write great. Yeah, ChatGPT writes better than Marc Andreessen, and he should be embarrassed. Like, the man, I think, already has $1.7 billion. He can afford an editor. Why didn't he get one?

0:37:32 - Jason Howell

Yeah, Well, that was another. I think a lot of people have felt that way too. It's just like it goes on and on Like this could have been worked. You could have workshopped this a little bit more and got it trimmed down to the essentials. How much of this do you think is just like? When I think of and maybe this is a pessimistic view but when I think of people in a powerful position such as this putting out something like this, that is obviously very polarizing because people either totally agreed with it or are coming out very heavily against it, and I can't help but think like how much of this is really just an effort to get under the skin of the people who I don't agree with or don't agree with me? What do you think?

0:38:13 - Dave Karpf

Maybe a little bit, but I actually think that there's something bigger going on here, or there's another element to it, which is he is signaling what people like him are supposed to agree with, and that wouldn't matter if he were, like, some random blogger on the internet like me. I run a blog and have tenure, but, like, random guy. It matters a lot because the network of people in Silicon Valley who are big in venture capital is very small and very well connected.

I mean, they basically killed Silicon Valley Bank last spring through, like, everyone freaking out in a single WhatsApp discussion group. A hundred guys now control enough capital that they could ruin a bank that they otherwise liked. And so that's the challenge: I don't think he's trying to get under his opponents' skin as much as he's trying to signal to everyone who wants to operate in his world that these are the things you're supposed to believe, these are the things that you're supposed to, kind of, genuflect to and name-check.

And that's the part that's really dangerous: I can make fun of this, but I'm also not trying to get Series A funding. If you are trying to get into, like, Y Combinator, you're going to need to show that you're a tech optimist, right, because they all know each other. And so, in that sense, while this is a sloppy document full of references to things that he definitely didn't read, it's also now full of references that everybody else is going to quote and pretend like they read too, and there is so much power and money in Silicon Valley that we should care about that. We can't just dismiss this out of hand. We should take this seriously, because this is now where everyone is going to need to go if they want funding.

0:39:55 - Jason Howell

Indeed. Great, great way to kind of wrap that up. Before I let you go, tell us a little bit about the book you're working on. I'm super fascinated by the fact that you're reading all of these past, you know, past issues of Wired magazine and making a book about it. Tell us a little bit about that. Sure.

0:40:16 - Dave Karpf

So this started when I actually read all of Wired magazine as a speed read in 2018. I wrote about it as a feature article for their 25th anniversary issue. That was October 2018. So if you want the short version, you can read that article. It's online at Wired. But that got me interested, because there was enough there that I said, you know, I need to do a longer, slower read through all of this to trace sort of these ideas of the digital futures past. Like, what did the future, the digital future, look like as it has been arriving over the past, at the time 25, now 30 years? I've been mostly writing that up on my Substack, so davekarpf.substack.com is the other place where people can see a lot of essays on this as they come together. And then this spring I have a sabbatical from the university, so that's when I'll actually be turning it into a book project.

0:41:01 - Jason Howell

All right, well, have fun on that sabbatical and we'll be watching what you post on your Substack. And, Dave, really, thank you for putting the call out. Thank you to Jeff Jarvis for connecting the two of us. It's been a pleasure having you on and getting your perspective on this. Really appreciate it. Thank you, Dave. We'll talk to you soon. All right, up next, is your DNA safe, Mikah? We'll find out.

If 23andMe is any indication, it might not be so much. That's coming up, but first, this episode of Tech News Weekly is brought to you by Melissa, the data quality experts. For 38 years, Melissa has helped companies harness the value of their customer data to drive insight, to maintain data quality and support global intelligence. All data goes bad, by the way. Up to 25% per year, in fact. Having clean and verified data helps customers to have a smooth, error-free purchase experience. Bad data is bad business, yes, and it costs an average of $9 million every year. Flexible to fit into any business model, Melissa actually verifies addresses for more than 240 countries to ensure only valid billing and shipping addresses enter your system. Melissa's international address validation cleans and corrects street addresses worldwide. Chinese, Japanese, Cyrillic: just a few of the writing systems that Melissa's global address verification actually supports. Addresses automatically transliterate from one system to another, which is just amazing. You can focus your spending where it matters most, and that is Melissa, of course, offering free trials and sample codes, flexible pricing, ROI guaranteed and unlimited technical support to customers all around the world.

You can download their free Melissa Lookups app from Google Play or the Apple App Store, no signup required. There you can validate an address and personal identity in the US or Canada. You can check global phone numbers to find caller, carrier and geographical information, and you can check global IP address information and so much more. Once you're signed up with Melissa, it's super easy to integrate their other services. They have some really valuable services to check out: Melissa Identity Verification, which increases compliance, reduces fraud and improves your onboarding. There's Melissa Enrich, which gains insight into who and where your customers are, and so many other things. You just got to go to the site to check it out.

Melissa specializes in global intelligence solutions and undergoes independent third-party security audits. They are SOC 2, HIPAA and GDPR compliant, so you know your data is in the best hands. Make sure your customer contact data is up to date. Get started today with 1,000 records cleaned for free, and you just go to melissa.com/twit. Make sure you use that URL. That's what tells them that you heard about it through us. That's melissa.com/twit. We thank Melissa for their support of Tech News Weekly. All right, 23andMe, which I have never used personally, but maybe for this reason, maybe.

0:44:16 - Mikah Sargent

Yeah, as you may have heard, or you may not have heard, 23andMe data was stolen and given to hacker forums and sort of dispersed on the internet. It's important to understand that, as far as we know, as far as 23andMe says they know, and as far as everything suggests, 23andMe was not breached, meaning that it was not some sort of hack of the company's servers, or that they gained access to the internal network or anything like that. Instead, this was your classic credential stuffing attack. For folks who don't know, that means that at some point, people's usernames and passwords from other sites were stolen and put onto the web. And then someone comes along and they stuff the credentials. They put username and password in over and over and over again to try to find one that lets them log in. Because people are still not listening to us about using a password manager (do it, do it), it results in people being able to gain access to certain accounts. Here is where the problem comes in.

23andMe has a feature that I would now call a bug, called DNA Relatives. This is behind the stories that you've heard about how somebody gets their DNA tested and then they find out that they've got an uncle that they didn't know they had, or a cousin, or maybe even a parent that they didn't know about or didn't know the whereabouts of. Whatever it happens to be, it's essentially an opt-in thing where I say I want my relatives who have 23andMe accounts, who are within a certain range of relation to me, to be able to see more about me as well. Let's say I have a first cousin and they have a 23andMe account. I have a 23andMe account and we both did our DNA profiles and that's how we were matched as first cousins. My first cousin could go to my profile and learn a little bit about my background.

What was happening is, by gaining access to accounts through credential stuffing and then digging deeper through that DNA Relatives feature, they were able to get even more information. What stuck out with the initial publishing of data is that it seemed to be targeted against Ashkenazi Jews. It was specifically DNA information, or I should say not even DNA information, but data related to this group. A million data points is how Wired puts it. The data includes your name, birth year, your sex and then ancestry results that are pretty broad. It would say things like broadly European or broadly Arabian. It's important to understand that, while this did happen through credential stuffing, it was not such that suddenly someone has the entire DNA makeup of an individual or of any individual.

0:48:04 - Jason Howell

I feel like if that had happened, it would be over.

0:48:08 - Mikah Sargent

Yes, that would have been awful. You would have definitely heard about it. It would have been much worse. At the most, they've got kind of like the things that we can almost just see when we look at someone. I think you're pretty European. You probably think I've got some African. That's because I do.

It's not as maybe terrifying as it could sound on the tin when you first look at it. What's of more importance, potentially, is the fact that a hacker who claims to be the same hacker is continuing to release more data. So they did get quite a bit of information through this credential stuffing attack and have released even more information now. The hacker, who goes by Golem, does claim to have data from, quote, the wealthiest people living in the US and Western Europe and even, I believe, claimed some specific celebrities were part of the data. Whether that's true or not has not been confirmed. You know, I could say that I have someone's information from 23andMe, but whether you can confirm that that is actually the data that belongs to that person is another matter.

0:49:26 - Jason Howell

Right, yeah, because they call out Zuckerberg, Musk, Sergey Brin in the sample data that was provided. But yeah, we don't know, and I don't think it would be in the best interest for the legitimate Zuckerberg to confirm that.

0:49:40 - Mikah Sargent

Oh yeah, I did.

0:49:41 - Jason Howell

Oh yeah, that is my idea.

0:49:42 - Mikah Sargent

That's probably mine. Probably not gonna happen, yeah, no. So what this boils down to is that 23andMe did reach out to customers and said, hey, it's a good idea to change your password and turn on two-factor authentication. That would have prevented this from happening. Also, one of the things that you have the option to do within 23andMe is choose whether 23andMe continues to hold on to your DNA profile for further testing that might take place down the line, for improvements to the technology and for further reports. You don't have to grant that, and you can, in fact, go in and un-grant that at any time if you want to, if you do have a concern about it being breached in more depth, I suppose is the best way to put that, and having that data actually stolen.

But honestly, I feel pretty good about the fact that the only way, thus far, a hacker or a bad actor has been able to gain access to 23andMe (because you know that there are so many attempts happening, for sure) is through credential stuffing, which is just one of the most brute force methods of doing it. And yeah, if you have people whose password is 123, I eat pie, I don't know.

0:51:17 - Jason Howell

Yeah, you're guaranteed to eventually find some sort of something that you're doing.

0:51:21 - Mikah Sargent

Yeah, get access to that, and it's only because you had that DNA Relatives thing turned on that they were able to get even a little bit more information about you. But they don't have, as far as my understanding and from the reports, the detailed percentages of the different data. It's just kind of the larger subsets. So ultimately, as is, excuse me, always our recommendation: use two-factor authentication. Go turn that on right now. If you do have a 23andMe account and you don't have that turned on, consider changing your password just to be safe, and use a password manager, including our sponsor, Bitwarden, which offers free password management to individuals. So might as well check it out and keep yourself safe if you have chosen to use one of these services.

0:52:12 - Jason Howell

Yeah, I think the thing that comes up for me regarding two-factor as a general good idea is that some sites that didn't use to support 2FA eventually do, and you as a user might have done the 2FA sweep before, but if you haven't checked lately, you might discover that more of the sites that you rely on actually support it now, especially now, because it's really become a big tool for prevention. So it's worth checking in on from time to time to be sure that all of your major accounts are protected with 2FA. If it wasn't offered before, it's possible it is now, and it's worth looking into.

0:52:50 - Mikah Sargent

And there are several sites online that have, like, a catalog or database of what sites offer two-factor authentication, and many a password manager has that built in. Where it finds out that two-factor authentication has been added to a site, it'll say, hey, you can go and turn this on now, get that logged in. So do it, yeah, do it, and keep your data safe.

0:53:13 - Jason Howell

Keep your data.

0:53:13 - Mikah Sargent

Slash DNA. Yes, especially that. I mean, yeah, that is a form of data, right? That is human data.

Absolutely Alrighty, folks, we will come back with the latest meme, the next potential to destroy a thing we all could come to love. You'll see. But we do want to take a quick break to tell you about our third sponsor. Today, it's Mylio who are bringing you this episode of Tech News Weekly.

I know you've heard how much we've come to love Mylio Photos. It is the solution to digital management for photos, for videos and documents, and it's free, and their latest round of updates is giving users even more to be excited about. See, Mylio has refined its search and grouping tools, offering incredible cataloging and access for your important files. Mylio Photos automatically assigns AI smart tags to your files and, with their updates to dynamic search and quick filters, you can find specific photos incredibly fast. It's really handy to, again, on device, have this AI smart tagging taking place and then drill down into my photos to find that exact photo I was looking for. A photo with dogs in it, a photo where a person is smiling, a photo at the beach. Ah, suddenly there's the photo of my mom and my dogs at the beach smiling, looking like they're having a great time. I knew that photo was there, but I couldn't find it using other photo management systems. Mylio made that so simple to do, and now, on top of that, you can work with others on specific photos thanks to the new Spaces tool. With the Spaces tool you can easily determine what's visible and what's not, opening the door for more engaging collaborations at work or even just sharing with the family. So you can create a specific space and say, I want all the photos that we took at the beach to be included in this space, and then my family can view it. I can even give access so you can work on the photos. Or maybe you have a product marketing thing that you're doing, so you can share with the product marketing team. You create custom categories into a quick collection. It's easy to share so that I and other Mylio Photos users can collaborate on editing, managing and sharing that media in any given space. And it's great that you can keep spaces private, either with passwords or PINs, so that there's that extra security when sharing those spaces. So they get access to the space, they've got the PIN they type in, and then they can see those photos or those documents.

I love personally that I can use Mylio across all of my devices and be able to have that synced from my Mac Studio. That's kind of the source of truth. And being able to plug in different sources, I think, was the big thing about Mylio Photos that made it so cool: I've posted photos to Instagram over the years, I've uploaded photos to different online services, and being able to pull all of those and have them locally and know that those photos are mine and that I can browse through them is so great.

With the Mylio Photos Plus subscription, all of my devices are connected in that one library I was just talking about. There's no cloud storage required. So with that offline storage I don't need to rely on the cloud to keep files accessible through devices. But I can also create new backup systems and know they're secure. So download Mylio today for free and see what has us so excited.

Get Mylio Photos on your computer or your mobile device (it's on all of it) by going to the special URL mylio.com/twit. That's mylio.com/twit. Download Mylio Photos for free right now at mylio.com/twit. I know you all can open the Google Play Store or the App Store and type in Mylio Photos, but I'm asking you to please go to that URL. I promise you'll get to that app link very quickly, but by going there first, that's how they know that we sent you. So please go that way to find Mylio, and we thank you for your support and we thank Mylio for their support of this week's episode. Okay, Jason Howell, is someone in a shower wearing a headset?

0:57:31 - Jason Howell

Oh God, I hope not, but probably. I mean, this is a large planet, there's a lot of people on it.

0:57:36 - Mikah Sargent

There's gotta be at least one person doing that.

0:57:38 - Jason Howell

Somewhere right now there's a person. Everything is possible. I'm pretty certain, if it hasn't happened already, it will soon. But that's not what we're gonna talk about, because that could get weird. But we are tangentially related to that, because the Meta Quest 3 started arriving to people last weekend. So this is Meta's latest standalone VR headset with all sorts of cameras built into the visor. They actually made some really important improvements to the hardware this time around, the full-color pass-through camera being the thing that we're gonna talk about today. So on the previous Meta Quest, you had a pass-through camera, but it was black and white, a little latent, not nearly like reality. It's not like you're looking through the cameras to the lens of reality. It was more meant to just, like, give you an idea of where things were when you wanted to draw your border. What did they call that border? Oh, the boundary. Yeah, something boundary. Why can't I remember? Because it's been a while since I've done VR at this point. Mine sits in its drawer.

But it's always waiting. It's there, ready when you're ready, and I'm never ready and the battery's probably depleted at this point. But anyway, so the pass-through wasn't perfect. It's definitely not perfect in the sense of what we think about when we think of, like, future technology, augmented reality, you know, smart glasses sort of thing. Not what we think about when we think of Apple's, you know, headset, what that's going to enable. Well, the Meta 3, or the Meta Quest 3, has that improved system. So you've got full color, low latency. It's really designed to be the first Meta Quest headset that could be used for true augmented reality situations. And then, you know, of course, you follow that along and there will always be the people that think, well, why don't we push this a little further than what they intended? And, as you know, it's battery powered, so you can go anywhere with it.

So Sean Hollister over at the Verge wrote about the fact that, not too long after these started hitting people's doorsteps and being delivered to them, did people start throwing on their Meta Quest 3s and going out into the real world wearing them. And I mean there's tons of video that has been shared online. Sean kind of wraps up a few of them, and this is, you know, this is one video of a person this is the pass-through camera being recorded within the Meta Quest 3, walking into a coffee shop holding on to a virtual web browser. I guess there's a video of him from a third person, like what he looked like, going into the coffee shop and essentially you know, ordering whatever he's ordering from the barista, and doing it all while wearing a Meta Quest 3 on his head.

1:00:37 - Mikah Sargent

I just bless these human beings who do any kind of service, who have to pretend like nothing is weird. Yeah, you know, and I'm not even just talking about wearing a headset, but just, you think about the folks where you could just walk up in, I don't know, a Big Bird outfit with the mask off, and they would just be like, how would you like your coffee today?

1:01:03 - Jason Howell

You are just another person. Yes, I'm here to get you a coffee. You know, this is a person. I guess what in there?

1:01:10 - Mikah Sargent

Now, that's cool, though, I have to say, that that stayed where it was. That's not easy to do. Yeah, it needs a proper anchor.

1:01:18 - Jason Howell

Look at that. Yeah, it's pretty neat. And actually he goes out onto his balcony. Do I need a Meta Quest 3? I know, right, goes out onto his balcony. At this point I'd be sweating bullets and be like I don't know. I'm next to a ledge Like this. Is my TV going to fall off? Am I going to fall off? Like oh, I misjudged the distance and I see, thankfully that didn't happen in the video Sweaty palm. Yeah, I mean, I don't know that I feel comfortable getting that close to a ledge wearing a Meta Quest 3 on pass through. And then there's some footage from I think this was a Comic Con, was it New York Comic Con? Walking through, and there's tons of videos here where this person was wearing the Meta Quest 3. And you know, I guess, I guess at this stage.

Yeah, there are some little jitters, because when you're talking about, you know, these things, like the browser, and being able to pin it to a certain spot, the hardware needs to be able to anchor to something in order to know what's real, what the perspective shift is on that, when you move your head and you're not real man. And I imagine in big populated places those anchors can get pretty confusing, but at the same time it looks like it's doing a pretty decent job.

1:02:26 - Mikah Sargent

It's impressive, but yeah, the people walking in their shoes and and all of that Like that stuff can all probably confuse it.

1:02:33 - Jason Howell

This person is going into an elevator, just continuing to watch the film. You kind of see the reflection in the mirror in the elevator while watching a movie on a virtual screen. No big deal. That is wild. NBD. So, you know, and Sean, of course, you know, his headline: the Meta glassholes have arrived. I was an early adopter of Google Glass. Google invited me to the Google campus and allowed me to get fitted, and actually, you know, Leo purchased the Glass, and so I was invited with that first kind of round of people with Google Glass and got to go there and put it on.

And I remember getting in the car wearing the Glass, driving back to Petaluma from Mountain View and just thinking, you know (it's different, right, it's not like a full pass-through or anything), but having that little kind of navigation pill up in the corner of my head and just being like, well, that's cool, I don't have to, like, look at a screen or anything like that, it's just always there. This is the future, you know. But that was a while ago. There was a swift response, swift counteraction against the, quote, glassholes coming into public places wearing these things, people not knowing: Are you recording? Right? Are you streaming? That feels like an invasion of privacy. And are we in a different place now?

1:03:52 - Mikah Sargent

I think that we are at a different place now. I think that, even more than then, everybody's got their phone out. And yeah, perhaps there's a better understanding of the rule of law in general, which is, if you're in a public place, you don't have that protection of privacy. No. And I don't know, maybe it's just because we all, even now, have more connection to other people, and think about even little things like TikTok that are so big with so many people. You just see people being weird and doing weird things.

1:04:28 - Jason Howell

That's true, you see that more now. Yeah, where it's like oh, they're recording a TikTok, obviously, like there's a performance happening there for a little camera that's right over there.

1:04:37 - Mikah Sargent

And so we're all just, I think, more used to people being bizarre or something, if that makes sense.

1:04:42 - Jason Howell

We also live in an area where that is highly normalized. That is true, I would imagine yeah, in Montana somewhere.

1:04:49 - Mikah Sargent

If I walked around with that, they might be a little weirded out.

1:04:52 - Jason Howell

I'm guessing there would be a swift response or reaction. Also, what about the sheer size of the device? Like, when we think of the Google Glass, like, yeah, it looked weird, but it was still very small.

1:05:03 - Mikah Sargent

This thing's big. Like, there's no questioning that this thing probably has cameras on it, and maybe that's part of it, because we don't wanna be tricked, right? As human beings, we don't want someone pulling one over on us. So I can see this big contraption and just avoid that person, whereas maybe with the Google Glass it was a little bit like, are you sneakily recording? Yeah, are you getting away with something right now? What is that? What are you doing there? But I see that and I'm like, I'm not getting in the elevator with that person. You can watch your cartoon. Yeah, you do you, you do you.

1:05:33 - Jason Howell

So anyways, I don't know that I'm going to be walking around in the world with a Quest 3 anytime soon, but I will say some of the pass-through video that was recorded kind of acts as a more updated view of what augmented reality combined with a face computer could be when things are miniaturized. And again we get into that weird murky world of, okay, well, then are people gonna be weirded out when it's miniaturized and looks like everything else? That's another thing. But I mean, Apple does have its Vision Pro coming, right, and I don't know if maybe this is kind of like the beginning of the next phase.

1:06:15 - Mikah Sargent

I was gonna say very much the beginning, because they've never shown it as this thing you're wearing out and about. In all of the product photography, it's very much like you're at home, it's controlled, you're at work. But people are gonna do it. Yeah, oh, absolutely they're gonna do it. I think what's cool about this, though, is this is quite literally the start of what I hope is the next jump, in a way, which is that no longer are our interfaces constrained to this specific physical object. I can have a little phone in my peripheral, or I can have a big old screen if I want to. If I'm just sitting at the doctor's office waiting, now suddenly right there I've got this gigantic screen to watch something if I want to, or do some work while I'm waiting. Doctor walks in like, hey, hello. Mikah, I'm here.

Or you're taking an Uber somewhere and you're just sitting there and now you've got this opportunity. It's just, I think, that that concept is the next jump, but I think I am going to wait to be walking around with something like this. No, it's gotta be something small and not this.

1:07:26 - Jason Howell

Yeah, not this. Although Meta does have a thing that's small and not that: the Meta Ray-Bans. They're a very different product. They're not quite the same thing, but there you go.

There you go. Meta Glassholes everywhere. All right, we've reached the end of this episode of Tech News Weekly. Thank you so much for watching and listening, as we hope you do each and every week, because you subscribe, right? And if you don't, you should: twit.tv/tnw. Go there, you'll find all the ways. Or just, you know what, just open up your podcatcher, which we know you all have, and just do a quick search for TNW, or Tech News Weekly, actually. Do a search for that and you'll find us. Subscribe there.

1:08:03 - Mikah Sargent

If you would like to, you can get all of our shows ad-free. And it's another way to help support us, by joining Club TWiT at twit.tv/clubtwit. When you join the club at $7 a month or $84 a year, you will get every single TWiT show with no ads, just the content, because you, in effect, are supporting the show. You'll also gain access to the TWiT+ bonus feed that has extra content you won't find anywhere else: behind the scenes, before the show, after the show, as well as special events. So it's great. Once you subscribe, once you become a member, you will get access to a huge back catalog of great events that have happened, plus access to the members-only Discord server, a fun place to go to chat with your fellow Club TWiT members and also those of us here at TWiT. We've got some great events coming up, including, of course, in just a few minutes, well, several minutes, AI Inside, but later we've got the Home Theater Geeks recording going on, as well as, next Thursday, an escape room here at TWiT, where several of us from TWiT will be trying to, so, basically solve a giant puzzle box as the conceit of the escape room, when it's a portable system, but it should be a lot of fun. I know I'll be wearing some sort of costume for that, and I think others will too. Who knows, show up and I might be the only one wearing a costume, and that's funny in and of itself. Anyway, along with all of that great stuff, you also get some Club TWiT exclusive shows, including the Untitled Linux Show, a show all about Linux. Hands-On Windows, which is a short-format show from Paul Thurrott that covers Windows tips and tricks. Hands-On Mac, which is a short-format show that covers Apple tips and tricks from yours truly.
Home Theater Geeks, that I just mentioned, with Scott Wilkinson, which is a great show covering all things home theater: interviews, reviews, questions answered, all sorts of fun stuff. And, as I also mentioned, AI Inside, with Jason Howell, talking all about artificial intelligence.

Oh yeah, again, twit.tv/clubtwit to join the club and get that warm, fuzzy feeling, knowing that you are helping us continue to make these shows for you. Now, if you would like to tweet at me, or, I should say, if you'd like to follow me online, I'm @MikahSargent on many a social media network, or you can head to chihuahua.coffee, that's C-H-I-H-U-A-H-U-A dot coffee, where I've got links to the places I'm most active online. Check out Hands-On Mac later today. Check out Ask the Tech Guys on Sundays, which is a show I host with Leo Laporte, where we take your questions live on air and do our best to answer them. And on Tuesdays, you can watch iOS Today with Rosemary Orchard and yours truly, where we talk all things Apple and its various operating systems. Jason Howell, what about you?

1:10:53 - Jason Howell

Well, you can find me doing, well, as Mikah said, AI Inside. That's my other major show right now, and we're really hitting our groove as far as that's concerned. Now we just need to get some music and an official title and some graphics and everything and make it, you know, carve it into stone, essentially. But until that happens, we're gonna continue doing it every week. And if you're outside of the club, you can watch us record this live to get a sense of what's happening inside the club. So every 1 pm Pacific, actually right after the recording of this show, every Thursday at 1 pm Pacific.

And today I'll be with Mike Wolfson, a friend of mine from the Android realm. We're gonna do an explainer on transformers and kind of the relationship of transformers in the technology of artificial intelligence and everything. So it's kind of a way to learn a little bit more about AI behind the scenes. And then, you know, producing some of Leo's shows, TWiG, Security Now, that sort of stuff as well. But find me, you know, all over the social media map. I think, Mikah, I think I'm gonna do the thing that you did. I think I'm gonna, your chihuahua.coffee, whatever that service is that that points to, I think I need to do that.

We're just at a point to where these things are spread so, so many different places, so I could spend a million hours, you know, telling you where to follow me, or I could just say go there. So I'm gonna do that, hopefully by next week, and then I'll say that here instead of a minute talking about what I'm going to do. Thank you to everyone who helped us do the show each and every week. We've got John Slanina, John Ashley, we've got everyone behind the scenes, Burke, testing people behind the scenes, and thanks to you for watching and listening each and every week and supporting us. We do appreciate you. We'll see you next time on Tech News Weekly. Bye-bye, bye, everybody.

1:12:36 - Lou Maresca

Come join us on This Week in Enterprise Tech. Tech expert co-hosts and I talk about the enterprise world, and we're joined by industry professionals and trailblazers like CEOs, CIOs, CTOs, CISOs, every acronym role, plus IT pros and marketeers. We talk about technology, software plus services, security, you name it, everything under the sun. You know what? I learn something each and every week, and I bet you will too. So definitely join us and, of course, check out the twit.tv website and click on This Week in Enterprise Tech to subscribe today.